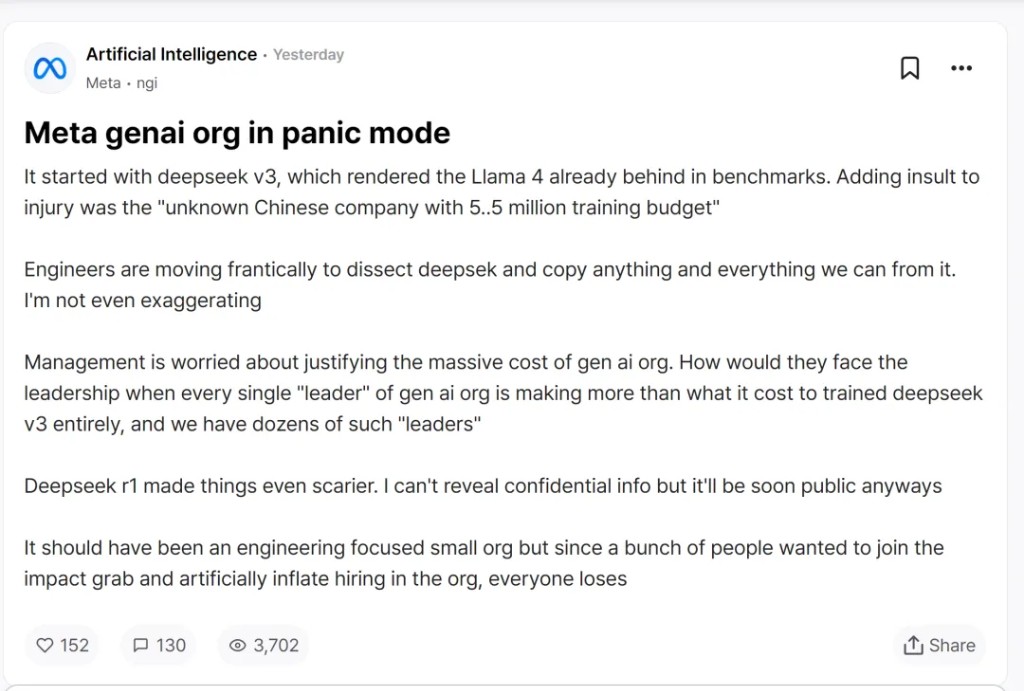

Is Meta Panicking? Internal Leak: Engineers Frantically Analyzing and Copying DeepSeek, Huge Budget Hard to Justify

Meta appears to be panicking over DeepSeek's rapid progress. An employee posting in an anonymous workplace community said that DeepSeek's low-cost, high-efficiency models are making it hard for Meta's generative AI team to justify its budget. According to the post, DeepSeek-V3 has already beaten Meta's unreleased Llama 4 in benchmark tests, and management is worried about how to justify the cost of such a large organization, especially when individual leaders' salaries exceed the entire training cost of DeepSeek-V3.

"Engineers are frantically analyzing DeepSeek, trying to replicate anything possible from it."

DeepSeek's open-source large models are genuinely shocking American AI companies.

The first to panic appears to be Meta, itself a prominent advocate of open source.

Recently, a Meta employee posted anonymously on Blind (teamblind.com), an American workplace community. According to the post, a recent series of moves by the Chinese AI startup DeepSeek has thrown Meta's generative AI team into panic: DeepSeek's rapid, low-cost progress makes it difficult for Meta's team to justify its enormous budget.

The original text is as follows:

It all started with DeepSeek-V3, which has already left Llama 4 behind in benchmark tests. Worse still was the "unknown Chinese company with a 5.5 million training budget."

Engineers are frantically analyzing DeepSeek, trying to replicate anything possible from it. This is no exaggeration.

Management is worried about how to justify the costs of the massive generative AI organization. When the salary of each "leader" in the generative AI organization is higher than the cost of training the entire DeepSeek-V3, and we have dozens of such "leaders," how will they face the higher-ups?

DeepSeek-R1 makes the situation even scarier. Although I cannot disclose confidential information, this will soon be made public.

This was supposed to be a small, engineering-focused organization, but because so many people wanted to get involved and grab a slice of the pie, headcount was artificially inflated, and in the end everyone loses.

Original post link: https://www.teamblind.com/post/Meta-genai-org-in-panic-mode-KccnF41n

DeepSeek-V3 and DeepSeek-R1 were released on December 26, 2024, and January 20, 2025, respectively. At its release, DeepSeek-V3 was reported to outperform other open-source models such as Qwen2.5-72B and Llama-3.1-405B on multiple evaluations, and to perform on par with the world's top closed-source models, GPT-4o and Claude-3.5-Sonnet.

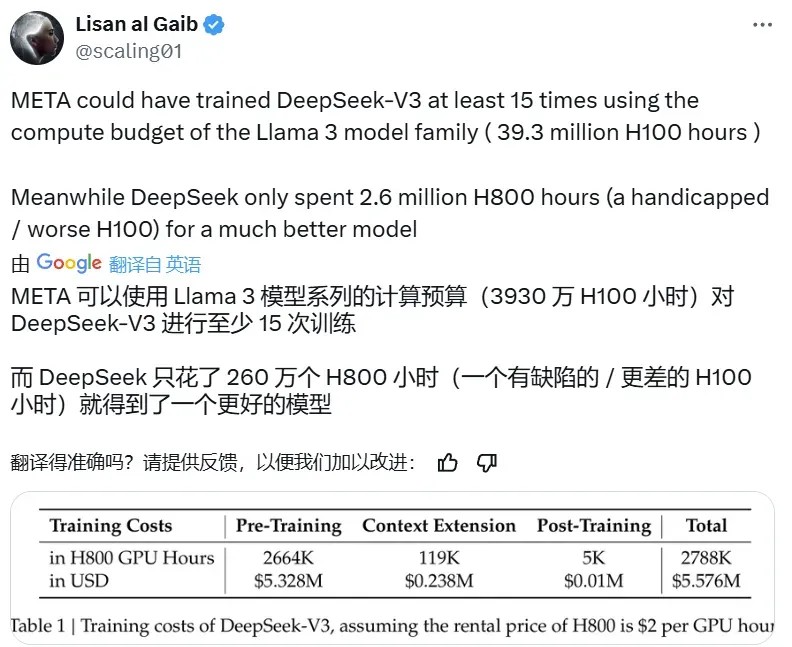

Even more notable, this 671B-parameter large language model cost only $5.58 million to train. Its pre-training used just 2.664 million H800 GPU hours; including context extension and post-training, the total came to only 2.788 million H800 GPU hours. By contrast, Meta's Llama 3 series consumed a compute budget of up to 39.3 million H100 GPU hours, enough to train DeepSeek-V3 roughly 14 times over.
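The figures above can be checked with a few lines of arithmetic. The sketch below assumes a rental price of $2 per H800 GPU hour, which is the conversion rate DeepSeek's V3 technical report uses; the article itself does not state it. It also treats an H100 hour and an H800 hour as equivalent, which is only a rough approximation: the H800 is an export-restricted variant of the H100, so the ratio is a ballpark comparison, not an exact one.

```python
# Back-of-envelope check of the compute figures quoted in the article.
# Assumption: $2 per H800 GPU hour (the rate DeepSeek's V3 report uses);
# the article does not state this rate.

PRETRAIN_H800_HOURS = 2.664e6    # DeepSeek-V3 pre-training only
TOTAL_H800_HOURS = 2.788e6       # pre-training + context extension + post-training
LLAMA3_H100_HOURS = 39.3e6       # reported compute budget for the Llama 3 series
ASSUMED_USD_PER_GPU_HOUR = 2.0   # assumed rental rate, see note above


def training_cost_usd(gpu_hours: float, usd_per_hour: float) -> float:
    """Convert GPU hours into dollars at a flat rental rate."""
    return gpu_hours * usd_per_hour


def compute_ratio(bigger: float, smaller: float) -> float:
    """How many times the smaller budget fits into the bigger one.

    Treats H100 and H800 hours as equal, which is only approximate.
    """
    return bigger / smaller


cost = training_cost_usd(TOTAL_H800_HOURS, ASSUMED_USD_PER_GPU_HOUR)
ratio = compute_ratio(LLAMA3_H100_HOURS, TOTAL_H800_HOURS)
pretrain_ratio = compute_ratio(LLAMA3_H100_HOURS, PRETRAIN_H800_HOURS)

print(f"Estimated DeepSeek-V3 training cost: ${cost / 1e6:.3f}M")
print(f"Llama 3 budget / full DeepSeek-V3 budget: {ratio:.1f}x")
print(f"Llama 3 budget / DeepSeek-V3 pre-training only: {pretrain_ratio:.1f}x")
```

Run as-is, this prints a cost of $5.576M (matching the $5.58 million figure) and ratios of about 14.1x for the full budget and 14.8x for pre-training alone, so "roughly 14 times" is the defensible reading of the numbers.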

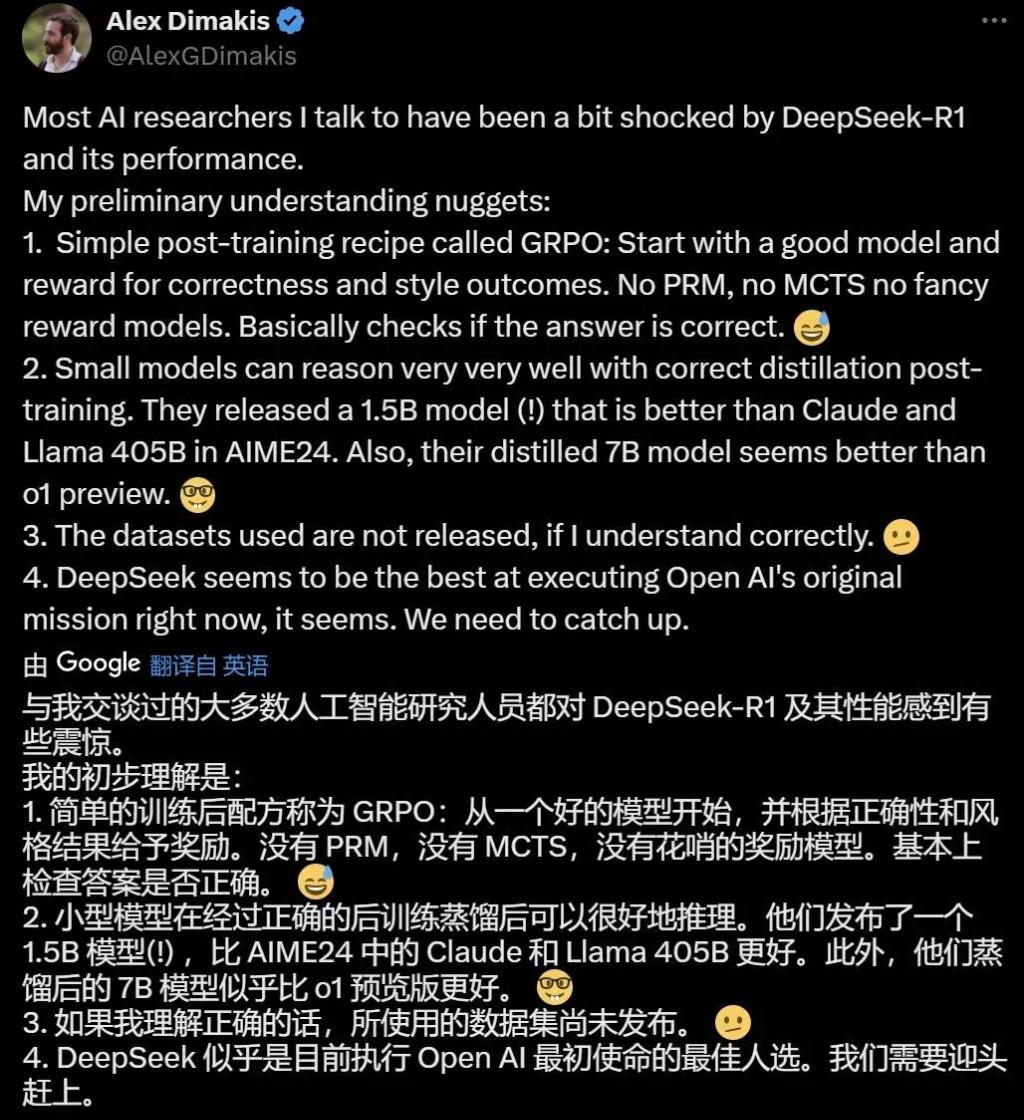

The recently released DeepSeek-R1 is even more capable: on mathematics, coding, and natural-language reasoning tasks, it performs comparably to the official release of OpenAI o1. Its weights were also open-sourced on the day of release. Many people exclaimed that DeepSeek is the true "OpenAI." UC Berkeley professor Alex Dimakis believes DeepSeek is now in the lead and that American companies may need to catch up.

Against this backdrop, it is easy to see why the Meta team is panicking. If Llama 4, due out this year, does not deliver genuinely strong capabilities, Meta's status as the "light of open source" will be in jeopardy.

Some pointed out that it is not just Meta that should be worried; OpenAI, Google, and Anthropic are also facing challenges. "This is a good thing; we can see in real-time the impact of open competition on innovation."

Others are worried about Nvidia's stock price: "If DeepSeek's innovation is real, do AI companies really need that many GPUs?"

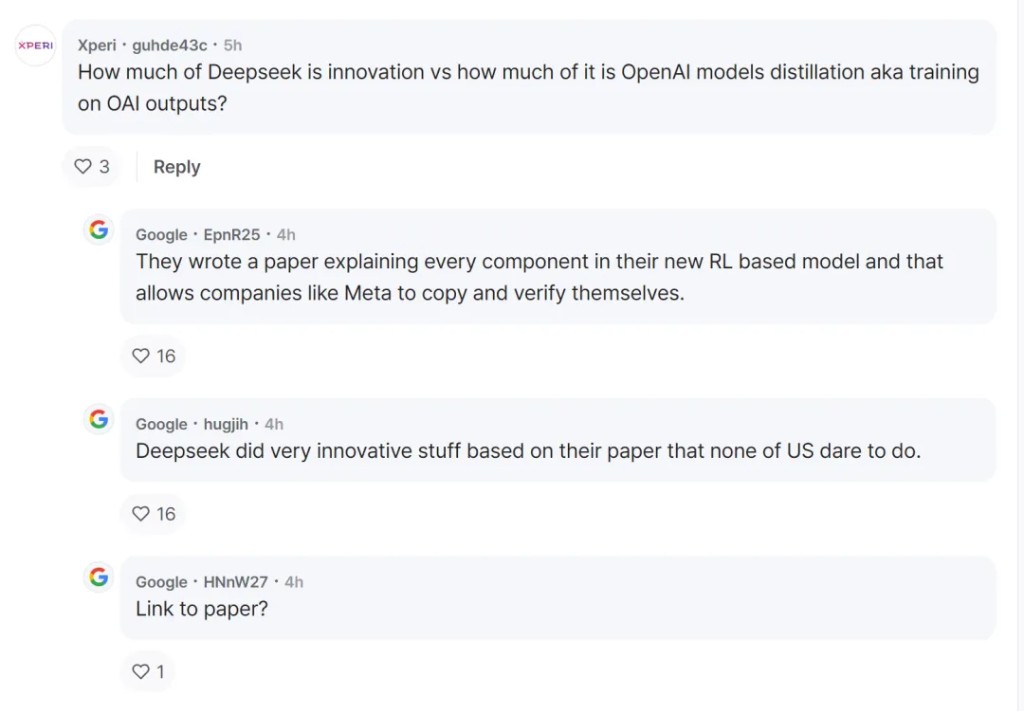

However, some people question whether DeepSeek succeeds through innovation or by distilling OpenAI's model. Some have replied that the answer can be found in their published technical report.

Currently, we cannot determine the authenticity of the post.

It is unclear how Meta will respond in the future, and what performance the upcoming Llama 4 will achieve.

Source: Machine Heart, original title: "Is Meta in Panic? Internal Leak: Analyzing and Copying DeepSeek Madly, High Budget Difficult to Explain"

Risk Warning and Disclaimer

Markets carry risk; invest with caution. This article does not constitute personal investment advice and does not take into account the specific investment goals, financial situation, or needs of individual users. Users should consider whether any opinions, views, or conclusions in this article suit their particular circumstances. Any investment made on this basis is at the user's own risk.