Sky-high spending spooks the stock? Oracle's earnings call rushes to put out the fire: "Customers bringing their own chips" will conserve cash flow, and "we are not going crazy with debt"

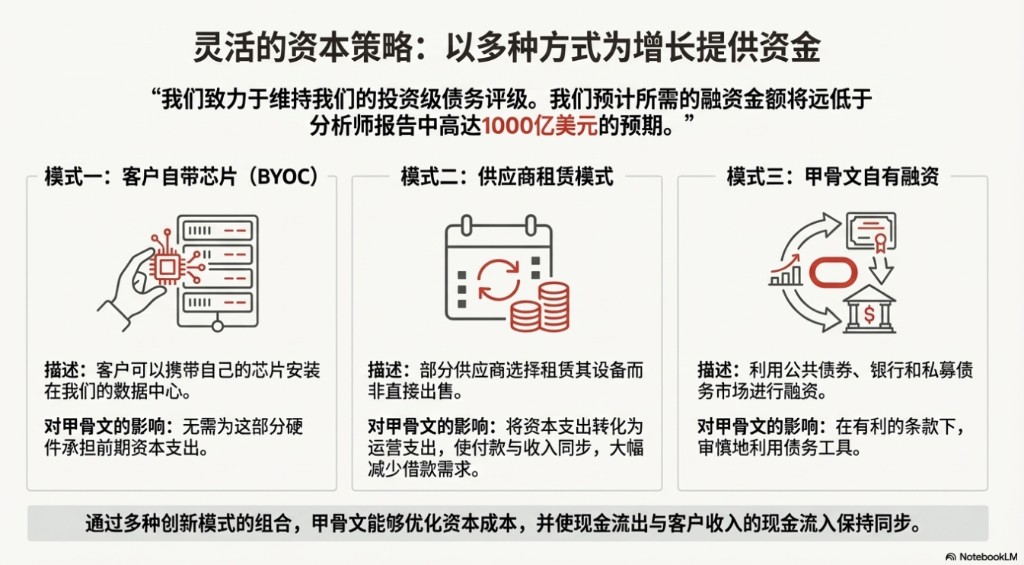

Despite a backlog of more than $500 billion, Oracle spooked Wall Street with plans to spend an additional $15 billion. The company floated an "unprecedented" new model: instead of the cloud vendor buying all the hardware, customers (such as OpenAI) can bring their own chips to the table. Executives were eager to show that "we don't need to go into debt as crazily as you might think."

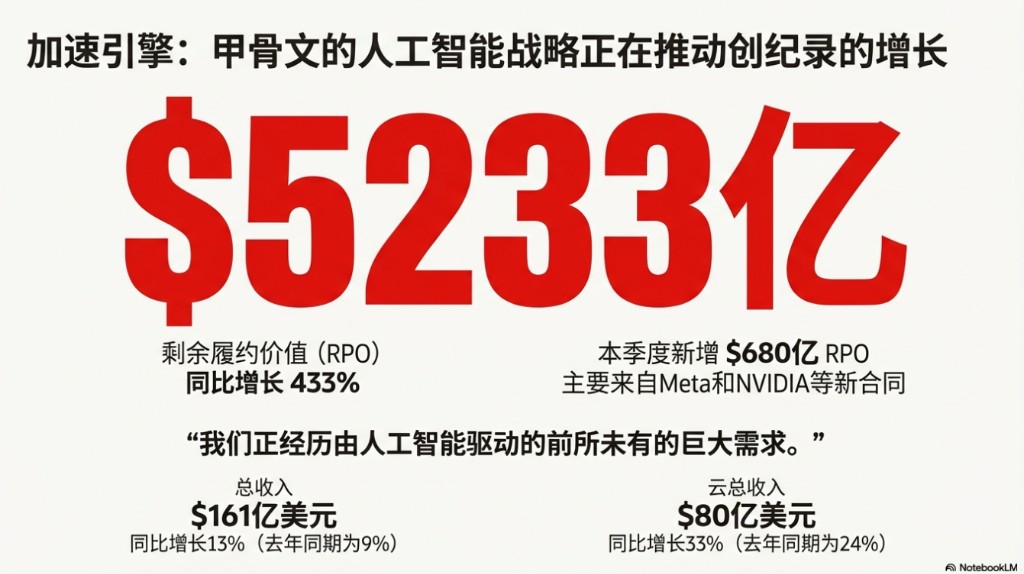

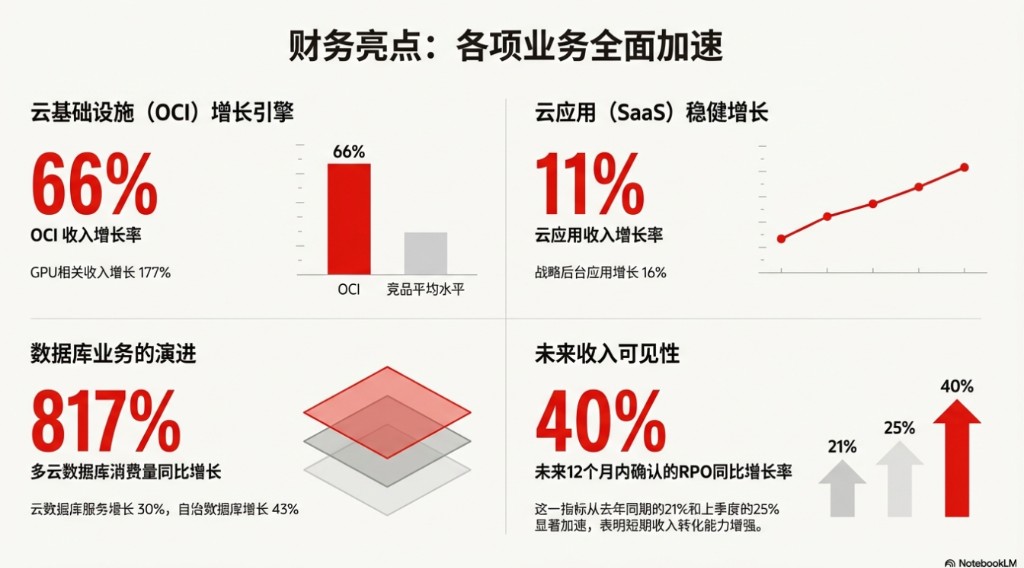

Amid widespread talk of an AI bubble, Oracle released its latest quarterly results overnight, and they were impressive: revenue grew 13% year-on-year, and cloud infrastructure (OCI) revenue surged 66%.

However, the market's attention was consumed entirely by the company's aggressive capital spending plans. Although Oracle holds an astonishing $523.3 billion in contract backlog (remaining performance obligations, or RPO), it announced that it must spend an additional $15 billion on capital expenditures. That "burn rate" frightened investors, and the stock fell more than 10% in after-hours trading.

Faced with market skepticism about a "debt black hole," the management team mounted a vigorous defense on the conference call, arguing that the spending was not blind expansion but a response to genuine demand "forced" on Oracle by major clients.

During the call, the executive team, including co-founder Larry Ellison, spent considerable time explaining why this investment was safe and how Oracle could use "financial engineering" to avoid falling into a debt crisis.

Notably, Oracle's management also floated a defense unprecedented in the cloud industry: instead of the cloud vendor buying all the hardware, clients (such as OpenAI) would be allowed to bring their own chips. This is not only about conserving cash flow. It also reveals an insider truth about the industry: even Oracle finds it hard to earn substantial profits by simply renting out expensive NVIDIA GPUs.

Key points from the conference call:

- Surge in capital expenditures (CapEx) triggers panic: The company now expects FY2026 capital expenditures to be about $15 billion higher than its forecast after Q1 (bringing the total to roughly $50 billion), sending the stock down more than 10% in after-hours trading.

- Management defends its debt position and introduces a "client brings own chips" model: Responding to Wall Street's concerns about borrowing on the order of $100 billion for infrastructure, Oracle executives stressed that, thanks to the "client brings own chips" and chip-leasing options, actual borrowing needs will be "far lower than most people's model predictions," and they pledged to maintain an investment-grade credit rating. Media commentary noted that "client brings own chips" is "unprecedented" in the cloud industry and fundamentally changes cloud vendors' traditional "buy and lease" business logic.

- Risk management: Addressing customer-concentration risk, co-CEO Clay Magouyrk stressed that Oracle's AI infrastructure is highly "fungible": computing capacity can be shifted from one client to another "within hours," demonstrating strong operational flexibility.

- Explosive backlog (RPO) growth: RPO reached an astonishing $523.3 billion, up 433% year-on-year; the $68 billion added this quarter came primarily from compute contracts with giants such as Meta and NVIDIA.

- Larry Ellison's vision for the future of AI: Ellison believes the future of AI lies in "multi-step reasoning on private data," saying that Oracle's AI data platform can connect large models to all of a company's databases (including non-Oracle data), which is the key to breaking down data silos.

- Infrastructure (OCI) growth outpaces competitors: OCI revenue grew 66%, GPU-related revenue surged 177%, and more than 96,000 NVIDIA GB200 chips have been delivered at the Abilene, Texas supercluster.

“Not only do we build data centers, we also let customers bring their own chips”: Responding to rumors of $100 billion in debt

After the earnings release, the market's biggest concern was whether Oracle has enough money to fund AI infrastructure on this scale. Analysts had previously predicted that Oracle would need to borrow as much as $100 billion to complete the build-out.

In response, Oracle co-CEO Clay Magouyrk directly rebutted this prediction when Deutsche Bank analysts pressed him on financing needs, revealing the atypical financing model Oracle is now adopting:

“We have read many analyst reports predicting that we need to raise up to $100 billion... Based on what we currently see, the amount of financing we need will be less than, and even significantly less than, that figure.”

Clay then explained how it works: Oracle is adopting a “Bring Your Own Chips” model.

This is an unprecedented move in the cloud industry. In the traditional model used by Amazon Web Services and Google Cloud, the cloud vendor buys the servers and rents them to customers. According to analysis by The Information, however, because margins on simply renting out expensive NVIDIA chips are shrinking, Oracle is trying to shift this enormous capital-expenditure risk elsewhere.

In this model, customers like Meta or OpenAI purchase expensive GPUs themselves and install them in Oracle's data centers.

“In these models, Oracle clearly does not need to incur any upfront capital expenditures,” Clay added. In addition, some suppliers are willing to lease equipment rather than sell it, allowing Oracle to “synchronize payments with receipts” and greatly easing cash-flow pressure.

The secret of OpenAI and the Texas supercluster?

This new model is consistent with earlier market reports. Those reports had indicated that OpenAI was negotiating to lease NVIDIA chips in Texas rather than buy them outright, and on the call Oracle said that its supercluster in Abilene, Texas was progressing well.

The market speculates that OpenAI may be one of the first major adopters of Oracle's “customer brings/rents chips” model. If the model succeeds, Oracle will shift from an asset-heavy “landlord + furniture dealer” to a relatively asset-light “sub-landlord,” which would greatly improve its long-term return on invested capital (ROIC). Through this "financial engineering," Oracle is trying to ride the AI wave without damaging its balance sheet.

$15 Billion "Overrun": Because "Orders Are Coming Too Fast"

Regarding the sudden $15 billion increase in expected capital expenditures, CFO Doug Kehring framed it as "a good problem to have."

He noted that RPO (remaining performance obligations) grew by $68 billion in Q2, most of it tied to near-term demand that can be converted into revenue quickly.

"We only accept customer contracts when all components are in place and we are confident we can deliver on time and at a reasonable profit," Clay Magouyrk emphasized. In other words, the money is not for stockpiling graphics cards: customers have already signed and paid deposits and are eager for the computing power to go live.

Too Dependent on OpenAI? Oracle: Infrastructure Can Be Converted in "Hours"

In addition to funding issues, investors are also concerned about Oracle's reliance on a few large clients. If one of these major clients cannot pay or changes plans, what will happen to the customized data centers built for them?

CEO Clay Magouyrk provided an unexpected answer: Oracle's AI infrastructure has extremely high flexibility and "fungibility." He stated that the cloud provided for AI clients is "exactly the same" as that provided for all other clients, thanks to the company's early strategic choices in technologies such as bare-metal virtualization and hardware security erasure.

"How long does it take to transfer capacity from one client to another? The answer is 'in hours,'" Magouyrk said. He added that whenever capacity frees up, it "will quickly be allocated and deployed," given strong demand and a customer base of more than 700 AI clients.

This rapid conversion capability means that Oracle's infrastructure investments are not deeply tied to a single client, significantly reducing the potential risks associated with customer concentration.

Larry Ellison's Ambition: AI Is Not Just Chatting, It's "Private Data Inference"

When analysts questioned Oracle's moat in the AI ecosystem, the company's founder and CTO Larry Ellison took the microphone. He did not talk about computing power but rather about data. He believes that the next battlefield for AI will shift from "public data training" to "private data inference."

"Training AI models on public data is the largest and fastest-growing business in history. But reasoning on private data will be an even larger and more valuable business," Ellison said.

He described a scenario in which enterprises no longer need to move data around: Oracle's AI data platform lets models such as OpenAI's and Google Gemini "connect" directly to an enterprise's private databases, ERP systems, and even data stores from competing vendors.

"Through the lens of AI, you can quickly see everything happening in the business in real time," Ellison said, summing up his vision. The implication is that Oracle's core value lies in its control over the core data of the world's enterprises (ERP and databases), territory that AWS and Azure find hard to reach.

OCI Growth of 66%: More Than Just AI

Although the market is worried about an AI bubble, Oracle argues that its growth is broad-based. OCI (Oracle Cloud Infrastructure) generated $4.1 billion in revenue this quarter, up 66% year-on-year, a growth rate far ahead of Amazon AWS and Microsoft Azure.

"Our cloud infrastructure business continues to grow much faster than our competitors," Kehring said, noting that in addition to 177% growth in the GPU business, database services and multi-cloud consumption are also surging.

In the face of a sharp decline in stock prices, the core message conveyed by management is clear: the market's panic stems from a misunderstanding of traditional infrastructure models, while Oracle is leveraging its unique data position and flexible financing methods to make a high-leverage but "secured" bet in the AI wave.

The transcript of Oracle's Q2 fiscal year 2026 earnings call is as follows (assisted by AI tools):

Meeting Name: Oracle Q2 Fiscal Year 2026 Earnings Call

Meeting Time: December 10, 2025

Speaker Session

Operator:

Hello everyone, thank you for your patience. I’m Tiffany, and I will be your operator today. Welcome to Oracle Corporation's Q2 Fiscal Year 2026 Earnings Call. All lines have been muted to prevent background noise. After the speakers' remarks, we will open the Q&A session. (Operator instructions) I will now turn the call over to the head of investor relations, Ken Bond. Sir, please go ahead.

Ken Bond (Head of Investor Relations):

Thank you, Tiffany. Good afternoon, everyone, and welcome to Oracle's Q2 Fiscal Year 2026 Earnings Call. Joining us today are Chairman and Chief Technology Officer Larry Ellison; Co-Chief Executive Officers Mike Sicilia and Clay Magouyrk; and Chief Financial Officer Doug Kehring.

Copies of the press release and financial tables, including GAAP and non-GAAP reconciliation tables, other supplemental financial information, and a list of recent customers who have purchased Oracle Cloud Services or gone live on Oracle Cloud, are available on our investor relations website. Just a reminder, today's discussion will include forward-looking statements, and we will discuss some important factors related to the business. These forward-looking statements are also subject to risks and uncertainties that could cause actual results to differ materially from those stated today. Therefore, we remind you not to place undue reliance on these forward-looking statements and encourage you to review our latest reports, including the 10-K and 10-Q forms and any applicable amendments. Finally, we want to clarify that we have no obligation to update our results or these financial forward-looking statements even if circumstances change.

Before we take questions, we will first make some prepared remarks, and now I will turn the meeting over to Mike—sorry, Doug.

Doug Kehring (Chief Financial Officer):

Thank you, Ken. Regarding the data we are about to present, the following points apply to the second quarter results and third quarter guidance. First, we will discuss financial performance using constant currency growth rates, as this is how we manage the business. Second, unless otherwise noted, we will present data on a non-GAAP basis. Finally, regarding exchange rates, they had a positive impact of 1% on second quarter revenue and a positive impact of $0.03 on earnings per share.

For the third quarter, assuming exchange rates remain at current levels, they should have a positive impact of 2% to 3% on revenue and a positive impact of $0.06 on earnings per share, depending on rounding. Regarding second quarter performance, we completed another quarter of excellent execution. Remaining Performance Obligations (RPO) reached $523.3 billion at the end of the quarter, up 433% year-over-year, and increased by $68 billion since the end of August, primarily due to contracts signed with companies like Meta and NVIDIA, as we continue to diversify our customer backlog.
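As a rough consistency check, the RPO figures above can be back-calculated in a few lines of Python. This is a sketch that uses only the numbers stated on the call; the implied year-ago and end-of-August balances are derived estimates rather than figures Oracle reported, and rounding in the stated growth rate makes them approximate.

```python
# RPO figures stated on the call, in billions of US dollars.
rpo_now = 523.3      # RPO at the end of Q2 FY2026
yoy_growth = 4.33    # "up 433% year-over-year"
q2_increase = 68.0   # "increased by $68 billion since the end of August"

# A 433% increase means today's balance is (1 + 4.33) times last year's.
rpo_year_ago = rpo_now / (1 + yoy_growth)

# Removing the quarter's additions gives the approximate end-of-August balance.
rpo_end_of_q1 = rpo_now - q2_increase

print(f"Implied RPO a year earlier: ~${rpo_year_ago:.1f}B")
print(f"Implied RPO at end of August: ~${rpo_end_of_q1:.1f}B")
```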

RPO expected to be recognized in the next 12 months is up 40% year-over-year, compared to 25% last quarter and 21% in the same period last year. Total cloud revenue, including applications and infrastructure, grew by 33% to $8 billion, significantly accelerating from the 24% reported last year. Cloud revenue now accounts for half of Oracle's total revenue. Cloud Infrastructure (OCI) revenue was $4.1 billion, up 66%, with GPU-related revenue skyrocketing by 177%. Oracle's cloud infrastructure business continues to grow at a pace far exceeding competitors. Cloud database service revenue grew by 30%, with autonomous database revenue up 43%, and multi-cloud consumption surged by 817%. Cloud application revenue was $3.9 billion, an increase of 11%. Our strategic back-office application revenue was $2.4 billion, up 16%.
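The segment figures above can be cross-checked the same way. This minimal sketch assumes the stated growth rates apply directly to the reported dollar amounts; because they are constant-currency rates, the implied prior-year OCI revenue is only approximate. The $16.1 billion total revenue figure comes from the next paragraph of the CFO's remarks.

```python
# Reported Q2 FY2026 figures, in billions of US dollars.
oci_revenue = 4.1
oci_growth = 0.66           # constant-currency growth, so the back-calculation is approximate
cloud_apps_revenue = 3.9
total_cloud_revenue = 8.0
total_revenue = 16.1

# Prior-year OCI revenue implied by 66% growth.
oci_year_ago = oci_revenue / (1 + oci_growth)

# Share of total revenue that comes from cloud ("half of Oracle's total revenue").
cloud_share = total_cloud_revenue / total_revenue

# Infrastructure plus applications should add up to total cloud revenue.
components_sum = oci_revenue + cloud_apps_revenue

print(f"Implied OCI revenue a year earlier: ~${oci_year_ago:.2f}B")
print(f"Cloud share of total revenue: {cloud_share:.1%}")
print(f"OCI + cloud applications: ${components_sum:.1f}B")
```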

As we complete the integration of industry cloud applications and Fusion cloud applications under one sales organization across global regions, we are seeing increasing cross-selling synergies, which we expect will drive higher growth rates for cloud applications in the future. In total, this quarter's total revenue was $16.1 billion, an increase of 13%, up from the 9% growth reported in the second quarter of last year, continuing the trend of accelerating total revenue growth. Operating income grew by 8% to $6.7 billion. Non-GAAP earnings per share were $2.26, an increase of 51%, while GAAP earnings per share were $2.10, an increase of 86%. In this quarter, we recognized a pre-tax gain of $2.7 billion from the sale of our Ampere stake.

Turning to cash flow. Operating cash flow for the second quarter was $2.1 billion, while free cash flow was negative $10 billion, and capital expenditures (CapEx) were $12 billion, reflecting investments made to support our accelerated growth. It is important to clarify that the vast majority of our capital expenditure investments are directed towards revenue-generating equipment for data centers, rather than for land, buildings, or power facilities covered by leases. Oracle will not pay these lease costs until the constructed data centers and supporting facilities are delivered to us. Moreover, we purchase equipment very late in the data center build cycle, which allows us to quickly convert cash outflows into earned revenue as we provide cloud services to contracted and committed customers.
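Free cash flow is conventionally computed as operating cash flow minus capital expenditures. A minimal sketch, assuming Oracle uses that standard definition here, shows that the two reported figures account for the roughly negative $10 billion result:

```python
# Q2 FY2026 figures from the CFO's remarks, in billions of US dollars.
operating_cash_flow = 2.1
capital_expenditures = 12.0

# Standard definition (assumed): free cash flow = operating cash flow - CapEx.
free_cash_flow = operating_cash_flow - capital_expenditures

print(f"Free cash flow: {free_cash_flow:.1f} billion USD")  # about -9.9, reported as roughly -$10B
```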

Regarding funding our growth, we have multiple sources of funding within our debt structure in the public bond, bank, and private debt markets. Additionally, there are other financing options, such as customers potentially bringing their own chips to install in our data centers, and suppliers possibly leasing their chips instead of selling them to us. Both options allow Oracle to synchronize payments with receipts and keep our borrowing levels significantly below most model forecasts. As a fundamental principle, we expect and are committed to maintaining our investment-grade credit rating.

Turning to guidance. First, let's discuss the impact of the increased RPO in the second quarter on our future performance. The vast majority of these bookings involve opportunities where we have available capacity, meaning we can convert the increased backlog into revenue more quickly. As a result, we now expect an additional $4 billion in revenue for fiscal year 2027. We are maintaining our full-year revenue expectation of $67 billion for fiscal year 2026. However, given the increase in RPO that can be quickly monetized starting next year, we now expect capital expenditures (CapEx) for fiscal year 2026 to be approximately $15 billion higher than our forecast after the first quarter.

Finally, we are confident that our customer backlog is at a healthy level and that we have the operational and financial strength to execute successfully. While we continue to experience tremendous and unprecedented demand for cloud services, we will only pursue further business expansion if our profitability requirements are met and capital terms are favorable. As for specific guidance for the third quarter, total cloud revenue is expected to grow by 37% to 41% at constant currency, and by 40% to 44% in U.S. dollars. Total revenue is expected to grow by 16% to 18% at constant currency, and by 19% to 21% in U.S. dollars. Non-GAAP earnings per share are expected to grow by 12% to 14% at constant currency, to between $1.64 and $1.68, and by 16% to 18% in U.S. dollars, to between $1.70 and $1.74. Next, I will hand the meeting over to Clay.
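The third-quarter guidance can be reconciled against the currency effects Doug gave earlier (a 2% to 3% revenue tailwind and $0.06 on EPS). The sketch below uses only the stated ranges: it checks that the U.S.-dollar bounds sit about 3 points (and $0.06) above the constant-currency ones, consistent with the top of the stated FX range, and backs out the prior-year EPS base implied by each bound. That implied base is a derived estimate, not a figure from the call.

```python
# Q3 FY2026 guidance as stated on the call, as (low, high) pairs.
cloud_growth_cc, cloud_growth_usd = (37, 41), (40, 44)      # percent
revenue_growth_cc, revenue_growth_usd = (16, 18), (19, 21)  # percent
eps_cc, eps_usd = (1.64, 1.68), (1.70, 1.74)                # dollars per share
eps_growth_cc, eps_growth_usd = (0.12, 0.14), (0.16, 0.18)  # as fractions


def gaps(cc, usd):
    """Difference between each U.S.-dollar bound and its constant-currency bound."""
    return [round(u - c, 2) for c, u in zip(cc, usd)]


cloud_gap = gaps(cloud_growth_cc, cloud_growth_usd)        # [3, 3] percentage points
revenue_gap = gaps(revenue_growth_cc, revenue_growth_usd)  # [3, 3] percentage points
eps_gap = gaps(eps_cc, eps_usd)                            # [0.06, 0.06] dollars

# Implied prior-year EPS: guided EPS divided by (1 + guided growth), for each bound.
implied_base = [
    eps / (1 + g)
    for eps_range, g_range in ((eps_cc, eps_growth_cc), (eps_usd, eps_growth_usd))
    for eps, g in zip(eps_range, g_range)
]

print("Percentage-point gaps (cloud, revenue):", cloud_gap, revenue_gap)
print("EPS gap:", eps_gap)
print("Implied prior-year EPS:", [f"{b:.3f}" for b in implied_base])
```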

Clay Magouyrk (Co-CEO):

Thank you, Doug. Year-over-year growth in our infrastructure business accelerated to 66%. Everyone is well aware of the strong demand for AI infrastructure, but multiple segments of OCI are also driving this accelerated growth rate, including cloud-native, Dedicated Regions, and multi-cloud. The diversity of our functionalities within infrastructure sets us apart from emerging cloud vendors (Neoclouds) in AI infrastructure. Our unique combination of infrastructure and applications differentiates us from other hyperscalers. We have ambitious and achievable goals for capacity delivery on a global scale. OCI currently operates 147 live customer-facing regions and plans to build an additional 64 regions. In the last quarter, we delivered nearly 400 megawatts of data center capacity to customers. The GPU capacity we delivered this quarter is also 50% more than in the first quarter. Our supercluster in Abilene, Texas, is progressing well, having delivered over 96,000 NVIDIA Grace Blackwell GB200 GPUs. We also began delivering AMD MI355 capacity to customers this quarter. Our capacity delivery speed continues to accelerate.

We continue to see strong demand for AI infrastructure for training and inference. Before accepting customer contracts, we follow a very strict process. This process ensures that we have all the necessary elements to achieve customer success with a reasonable profit margin for our business. We analyze the land and power for data center construction, component supply (including GPUs, networking equipment, and optical devices), labor costs for various construction phases and low-voltage engineering, engineering capabilities for design, construction, and operation, required revenue and profitability, and capital investment. Only when all these components are in place do we accept customer contracts, confident that we can deliver the highest quality service on time.

As Doug mentioned, this quarter we signed an additional $68 billion in RPO. These contracts will quickly add revenue and profit to our infrastructure business. We continue to carefully evaluate all future infrastructure investments, only proceeding with investments when all necessary components align to ensure profitable delivery for customers.

The holiday season is a peak period for many retail and consumer customers. OCI is responsible for providing the safest, highest-performing, and most available infrastructure to support the scale required by these customers. Uber is now running over 3 million cores on OCI, powering its record traffic on Halloween this year. Temu expanded to nearly 1 million cores on Black Friday and Cyber Monday. Additionally, we supported thousands of other customers through our retail and other applications during their biggest and most successful holidays. OCI's capabilities and services are continually expanding. We recently launched Acceleron, providing enhanced networking services for all OCI customers, as well as other services like AI agent services. However, we cannot provide all services ourselves; we rely on a rapidly expanding partner community to deliver the best experience on OCI. We have added new AI models from Google, OpenAI, and xAI to ensure our customers have the latest and greatest AI capabilities. Driven by partners like Broadcom and Palo Alto, our Marketplace consumption has increased by 89% year-over-year.

These partners are driving OCI consumption by building SaaS businesses on OCI. Palo Alto has launched their SASE and Prisma Access platforms on OCI, while Cyber Region and Newfold Digital continue to rapidly expand their businesses. These partnerships enrich our ecosystem, benefiting our customers, and as partners build solutions on our infrastructure, this growth directly translates into more OCI growth.

Demand for Oracle Database Services across all clouds is increasing. Multi-cloud database consumption has grown by 817% year-over-year. This quarter, we launched 11 multi-cloud regions, bringing our live regions on AWS, Azure, and GCP to 45, with plans to add another 27 in the coming months. We are seeing increasing customer demand, with confirmed pipeline values in the billions of dollars.

This quarter, we launched two significant initiatives for multi-cloud. The first is Multi-cloud Universal Credits, allowing customers to commit to using Oracle Database Services at once and use them across any cloud with the same pricing and flexibility. The second is our Multi-cloud Channel Reseller Program, enabling customers to procure Oracle Database Services through their preferred channel partners.

We also launched nine services across different clouds, such as Oracle Autonomous AI Lakehouse. This combination of the right services, universal availability, consistent and simple pricing and procurement, and partner support is accelerating the adoption of Oracle Database Services across our customer base. OCI is the only complete cloud that can be deployed for an individual customer. We launched Dedicated Region 25, which provides the full capabilities of OCI in a footprint of just 3 racks. OCI is also the only cloud that enables partners to become cloud providers themselves through our Alloy program, and our new footprint is available to all Alloy providers.

Consumption of Dedicated Regions and Alloy has increased by 69% year-over-year. We launched a Dedicated Region for IFCA Group in Oman, and NTT Data and SoftBank each launched an Alloy region this quarter. This brings our live Dedicated Regions to 39, with plans to add another 25.

In summary, all four segments of our infrastructure business are growing at an astonishing rate, which will help accelerate our infrastructure revenue growth in the coming quarters. Customers choose OCI for its performance-optimized architecture, relentless focus on security, consistently low and predictable pricing, and unparalleled depth in database and enterprise integration. These priorities have been our strategy from the very beginning and are the driving force behind this growth. OCI is in a constant state of transformation, and you can see the value it brings to customers. When we combine our commitment to delivering the best performance, efficiency, and security with the growing demand we see from cloud infrastructure service customers, I am incredibly excited about the developments ahead.

Speaking of this. (Unclear)

Larry Ellison (Co-founder, Chairman of the Board, and CTO):

Thank you very much, Clay. For many years, Oracle has developed software in three important areas: databases, applications, and Oracle Cloud. We use AI to create our database software, and our autonomous software eliminates manual labor and human errors, thereby reducing operational costs and making our systems faster, more reliable, and more secure.

Now, with the development of Oracle AI Database and Oracle AI Data Platform, we are bringing together all three layers of our software stack to address another very important problem: enabling the latest and most powerful AI models to perform multi-step reasoning on all private enterprise data while keeping that data private and secure. Training AI models on public data is the largest and fastest-growing business in history. And AI models that perform reasoning on private data will be an even larger and more valuable business.

The Oracle Database contains most of the world's high-value private data. Oracle Applications also hold a significant amount of highly valuable private data. Oracle Cloud includes all top AI models, such as OpenAI ChatGPT, xAI Grok, Google Gemini, and Meta LLaMA. Oracle's new database and AI data platform, along with the latest version of Oracle Applications, enable all these AI models to perform multi-step reasoning on your database and application data while keeping that data private and secure.

All of our database and application customers want to do this because it gives them a unified view of all their data for the first time. AI models can respond to a single query by reasoning across all your databases and all your applications. By treating all data holistically, the combination of AI models with Oracle AI Database and AI Data Platform breaks down the barriers of siloed and fragmented data.

The Oracle AI Data Platform makes all your data—every piece of data—accessible to AI models, not just the data in Oracle Database and Oracle Applications, but also data from other databases. Data from cloud storage from any cloud, and even data from your own custom applications, can be accessed by AI models using the Oracle AI Data Platform. With our AI Data Platform, you can unify all data and perform reasoning on all data using the latest AI models. This is the key to unlocking all the value in all your data. Soon, through the lens of AI, you will be able to see everything happening in your business in real-time. Mike, over to you.

Mike Sicilia (Co-CEO):

Thank you, Larry. As Doug shared, total revenue grew by 13% at constant currency. I think it's worth noting that this marks three consecutive quarters of double-digit growth in total revenue. So, this is a solid quarter, and we see better days ahead. Let me break down some numbers further, all of which I will explain at constant currency.

Cloud application revenue grew by 11%, bringing our annualized run rate to approximately $16 billion. Among them, Fusion ERP grew by 17%. Fusion SCM grew by 18%. Fusion HCM grew by 14%. NetSuite grew by 13%. Fusion CX grew by 12%. In our industry cloud, specifically, the hotel, construction, retail, banking, catering, local government, and telecommunications sectors all together grew by 21% this quarter. So, it's a high growth rate on a large base. In our healthcare business, we now have 274 clients running their clinical AI agents in production in real-time, and this number is increasing daily. Similarly, in the healthcare sector, our brand new AI-based outpatient EHR has been fully launched and received approval from U.S. regulators. Finally, in healthcare, we expect our bookings and revenue to accelerate significantly in the third quarter.
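The "approximately $16 billion" run rate follows from the $3.9 billion in quarterly cloud application revenue the CFO reported earlier. This sketch assumes the common convention of multiplying the latest quarter by four; Oracle did not state exactly how it computes the figure.

```python
# Q2 FY2026 cloud application revenue from the CFO's remarks, in billions of US dollars.
quarterly_cloud_apps = 3.9

# Assumed run-rate convention: latest quarter times four.
annualized_run_rate = quarterly_cloud_apps * 4

print(f"Annualized run rate: ~${annualized_run_rate:.1f}B")  # prints ~$15.6B
```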

Overall, cloud applications grew by 11% on a larger base. This is very meaningful because we believe this business will continue to accelerate. While achieving this growth, we also undertook significant sales team restructuring in many regions around the world. This is something we've been talking about for years, the synergy between our back-office applications and industry applications. We are seeing more and more transactions where industry applications drive Fusion, or Fusion applications drive industry applications. With more and more transactions, we are also seeing larger deals that include more components.

Therefore, recently we merged the industry application sales team and the Fusion sales team into a single sales organization. This enables our salespeople to have more strategic one-on-one conversations with customers to sell higher-end products and sell more. Then, when you consider our very large on-premise application customer base, these strategic conversations are driving upgrades. You've heard us say before that simply moving customers to the cloud can bring a 3 to 5 times annual revenue uplift compared to support revenue.

Now, on top of that, in addition to the sales team, the single go-to-market motion, and industry suites, think about combining our AI data platform with this unparalleled suite of applications. This creates an extremely unique opportunity for our customers to quickly derive value from enterprise-level AI. This combination allows customers to integrate industry-leading foundational models with company-specific proprietary data, much of which comes from Oracle applications, as Larry mentioned. Of course, the AI data platform also incorporates non-Oracle applications, competitive data sources like MongoDB or Snowflake, object storage, and even fully customized unstructured data. So, we believe this allows our customers to easily build enterprise data lakes, AI agents, and leverage built-in rather than bolted-on AI to transform their business applications.

So to repeat, AI is certainly a great OCI play, but for Oracle, it is also a broader software play. It is also driving the growth of our applications and database business. Let me highlight a few key wins.

In the telecommunications industry, Digital Bridge Holdings chose ERP and SCM, Salaam Telecom chose SCM, and Motorola Solutions chose ERP, SCM, and CX. Tim Brazil, as the 5G leader in Brazil, has just signed a new five-year extension agreement to accelerate AI adoption and transform customer experience at scale, all built on OCI. In fact, this five-year deal is an extension of the partnership that began in 2021 when Tim Brazil migrated all data centers to OCI. So they are now building AI agents to support real customer interactions, including content agents that can automatically compare customer bills across months and explain discrepancies. In the pilot, the AI agents built on OCI have already improved problem resolution speed by 18%, and further improvements are expected as the rollout continues. They have 24 projects underway, with 7 already in production. In just a few months, 6 more are set to launch, all enabled by a multi-cloud architecture, with Oracle as the key AI infrastructure partner. Due to this initial rollout, customer satisfaction has increased by 16%, call center processes are managed end-to-end with 90% accuracy, resulting in a 30% reduction in customer service time and a 15% decrease in network failure interventions, all using predictive analytics, and once again, it is the AI co-pilot built into OCI. So we are realizing a personalized AI engine nationwide for Tim Brazil.

In financial services, CoreCivic chose ERP, SCM, and HCM; PrimeLife Technologies chose ERP; and Mutual Insurance chose ERP. In the public sector, the city of Costa Mesa chose ERP, SCM, and HCM; the U.S. Space Force chose ERP and HCM; and the city of Santa Ana chose ERP, SCM, and HCM. In high tech, SolarEdge Technologies chose ERP and SCM; Zscaler chose ERP; and Dropbox chose ERP and SCM.

I could go on about these wins, but I think this gives you a sense of how many multi-pillar wins we are seeing and why it is so important for us to give customers so many options on both the back end and the front end. In terms of go-lives, we are set to have 330 cloud application customers go live this quarter. That's multiple go-lives every day. Virgin Atlantic went live with Fusion ERP, HCM, and payroll in September. Broadridge has just gone live with Fusion ERP and EPM. LifePoint Health has just completed its third wave of Fusion ERP, SCM, and HCM go-lives. Saudi Telecom has gone live on Fusion SCM, with ERP and HCM to follow. DocuSign is now live with Fusion Data Intelligence. Again, I could go on; there were 330 go-lives in the quarter, which gives you an idea of how many truly significant launches we have had recently.

Cloud application deferred revenue grew by 14%. This is higher than the 11% growth in cloud application revenue, which just reinforces my earlier statement that we expect application growth to continue to accelerate. So, this is a broadly solid quarter. We are in the later stages of a sales reorganization focused on unified selling across the application portfolio. We are seeing a clear AI halo effect in cloud applications, which is driving upgrades. Our AI data platform combined with our applications is absolutely a game-changer, bringing the Oracle database and all of our applications to the center of the modern agentic enterprise. Looking ahead, we are executing well on a large and growing pipeline, and I expect revenue and earnings to accelerate on a larger base.

Ken, over to you.

Ken Bond (Head of Investor Relations):

Thank you, Mike. Tiffany, please open the line for questions.

Q&A Session

Operator:

(Operator instructions) Your first question comes from Brad Zelnick of Deutsche Bank. Please go ahead.

Brad Zelnick (Analyst):

Thank you. Congratulations to you all, especially to Mahesh and the team for building a significant amount of capacity this quarter. My question is for Clay, and perhaps Doug as well. Oracle is clearly the preferred destination for the most mature AI customers, but this is obviously a capital-intensive proposition far beyond anything Oracle has done before. Specifically, how much capital does Oracle need to raise to fund its future AI growth plans? Thank you.

Clay Magouyrk (CEO):

Thank you for the question, Brad. This is Clay. I’ll answer in two parts. First, let me explain why it’s difficult to answer this question precisely. One point many people don’t understand is that we actually have a lot of different options for how we deliver this capacity to customers.

There’s obviously the way people typically think about it, which is that we pre-purchase all the hardware. As for the data centers themselves, as I mentioned at the financial analyst meeting, we don’t actually incur any costs for these large data centers until they are operational.

Then the question becomes how you pay for the equipment that goes into the data centers, and what the cash flow looks like. We’ve been exploring some other interesting models. In one of them, customers actually bring their own chips. In that model, Oracle clearly does not need to incur any upfront capital expenditure.

Similarly, we are exploring different models with various suppliers; some suppliers are actually very interested in leasing capacity rather than selling it. As you can imagine, that has a different cash flow impact, one that is beneficial and reduces Oracle's overall borrowing needs and capital requirements.

So as we look at all these commitments, we will use a range of methods to minimize our overall capital costs, and of course, in certain cases, we will raise our own funds. As part of this, I want everyone to understand that we are committed to maintaining our investment-grade credit rating.

Now, to give you something more specific: we have read several analyst reports suggesting that Oracle will need as much as $100 billion to complete this build-out. Based on what we currently see, the financing we will need for this build-out will be less than, if not “substantially less” than, that amount. I hope that helps answer your question, Brad.

Brad Zelnick (Analyst):

Very helpful. Thank you. Thanks for taking the questions.

Operator:

Your next question comes from Ben of Melius Research. Please go ahead.

Ben (Analyst):

Hey, guys. Thanks very much, and great to talk to you. Given that answer, the path of OCI margins seems very important for improving EBITDA and cash flow. At the analyst meeting, you said that OCI's AI workloads would earn gross margins of 30% to 40% over the life of a customer contract. My question is: how long will it take for all of your OCI data centers to reach that level of AI margin, and what needs to happen to get there?

Doug Kehring (CFO):

Yes, thank you for the question, Ben. The answer really depends on the situation. The good news, as I mentioned earlier, is that we don't incur any costs until a data center is built and operational. We have also highly optimized the process of bringing capacity online and handing it over to customers, so the period in which we incur costs without the corresponding revenue and the gross margin profile we talked about is only a few months. That is not a significant amount of time.

What actually matters more is the overall mix of our online data centers and how that mix grows relative to our total global expansion. We are currently in a very rapid construction phase, and most of the capacity is not yet online, so the overall blended margin will clearly be lower for now. Our real focus is getting most of this capacity online, because the best way to improve margins quickly is to deliver capacity faster. That is what will ultimately let us reach the 30% to 40% gross margin profile across all of our AI data centers very quickly.
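The point that the blended margin depends on the mix of online versus still-ramping capacity can be illustrated with simple arithmetic. The Python sketch below is hypothetical: the per-site revenue and cost figures are invented for illustration and are not Oracle data. Fully online sites are assumed to earn a 35% gross margin, inside the 30% to 40% range cited on the call, and ramping sites are assumed to run at a loss for the period.

```python
from typing import List, Tuple

# Hypothetical per-site figures for one period: (revenue, cost of revenue).
MATURE = (100.0, 65.0)   # assumed: online and fully delivered, 35% gross margin
RAMPING = (20.0, 25.0)   # assumed: still being brought online, negative margin


def blended_margin(sites: List[Tuple[float, float]]) -> float:
    """Blended gross margin = total gross profit / total revenue."""
    revenue = sum(r for r, _ in sites)
    cost = sum(c for _, c in sites)
    if revenue == 0:
        raise ValueError("no revenue in period")
    return (revenue - cost) / revenue


def fleet(mature: int, ramping: int) -> List[Tuple[float, float]]:
    """Build a hypothetical fleet with the given mix of site states."""
    return [MATURE] * mature + [RAMPING] * ramping


if __name__ == "__main__":
    for m, r in [(2, 8), (8, 2), (10, 0)]:
        print(f"{m} online / {r} ramping: {blended_margin(fleet(m, r)):.1%}")
```

With these assumed figures, the blended margin moves from about 8.3% with two of ten sites online to 32.1% with eight online and 35.0% with all ten, which is the "deliver capacity faster" argument in numeric form.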

Ben:

Thank you.

Operator:

Your next question comes from Tyler Radke of Citibank. Please go ahead.

Tyler Radke (Analyst):

Yes, thank you for taking my question. This question is for Larry or Clay. Oracle has clearly established itself as a leading AI infrastructure provider for AI labs and, in some cases, enterprise customers. How do you view the opportunities for selling additional platform services, such as databases, middleware, and other parts of the portfolio, similar to how we saw cloud providers adding these services early on in the public cloud space? What similarities or differences do you see between the cloud platform as a service market and the emerging AI platform as a service market? Thank you.

Clay Magouyrk (CEO):

Let me start with traditional cloud and the traditional Oracle database. I think the biggest change we made there was making our database available in everyone’s cloud. You can now buy the Oracle database on Google or Amazon, as well as on Microsoft Azure and OCI. That was the first step, which we call multicloud: we actually embed OCI data centers inside other clouds, so customers there can get the latest and greatest version of the Oracle database.

The second thing we did was add functionality to the Oracle database that lets you vectorize all of your data, whether it lives in object storage across different clouds, in custom applications, or in another database. It takes your entire data universe, catalogs it, vectorizes it, and allows LLMs (large language models) to reason over all of that data.

What’s really extraordinary about this is that you can simply ask a question, and the model will look across all of your data. Typically, when you ask a question, you have to point it at a specific database or application. You can’t just say, “I’m a salesperson in this region, and I want to know who my next customer should be. Show me all the accounts in my region and who my best prospects are.” Answering that usually means looking at contract data, public data, our sales systems, support systems, all of these disparate systems. Now, suddenly, all of that data is unified. We take all your data and unify it, so you can ask a single question and the AI model can find the answer, no matter where the data is stored. This is a truly unique proposition, and we believe it will greatly increase the use of our database and our cloud.
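To make the "ask one question across all your data" idea concrete, here is a toy sketch of vector search in Python. It is only an illustration under stated assumptions: the records, the word-count "embedding," and the `search` helper are all hypothetical stand-ins, and nothing here reflects the actual API or implementation of Oracle's AI data platform. A production system would use a learned embedding model and a vector index instead.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical records pulled from different systems; the "source" field
# stands in for a contract store, a CRM, a support desk, and so on.
RECORDS = [
    {"source": "contracts", "text": "Acme renewal contract expires in March, west region"},
    {"source": "crm", "text": "Globex opportunity in the west region, strong buying signals"},
    {"source": "support", "text": "Initech support tickets rising, east region"},
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, records: List[Dict[str, str]], k: int = 2) -> List[Tuple[str, str]]:
    """Rank every record, regardless of source, by similarity to the query."""
    q = embed(query)
    ranked = sorted(records, key=lambda r: cosine(q, embed(r["text"])), reverse=True)
    return [(r["source"], r["text"]) for r in ranked[:k]]
```

Running `search("best prospects in the west region", RECORDS)` ranks the CRM record first and the contract record second, even though they come from different systems; the query never has to name a source.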

Tyler Radke:

Thank you.

Operator:

Your next question comes from Brent Thill of Jefferies. Please go ahead.

Brent Thill (Analyst):

Thank you. A question for Larry and Clay about the fungibility of your infrastructure. If a large customer is unable to pay, what would you need to do to transfer a data center from one customer to another?

Clay Magouyrk (CEO):

Yes, thank you, Brent. The first thing to understand is that the AI infrastructure we deliver is exactly the same cloud we deliver to all customers. From the outset of OCI, we made specific choices around bare metal virtualization and how we handle things like secure hardware erasure.

I bring this up because anyone with a credit card can sign up in any of the hundreds of regions I mentioned earlier and spin up a bare metal machine in a matter of minutes. When they are done, they can shut it down, and we can reclaim it and hand it over to the next customer in less than an hour.

So when you ask how long it takes to move capacity from one customer to another, the answer is measured in hours. I think the implied question is how long it takes the next customer to start using it. Fortunately, we have a lot of experience here: we have brought more than 700 AI customers onto our platform, including the vast majority of the large model providers, who already run on OCI. When we give them capacity, they can typically start using it within two to three days.

So taking capacity away from one customer and handing it to another is neither a laborious process nor a one-off process. One more thing: I think many people are not aware that this already happens on our cloud all the time. We have many customers who sign up for thousands of a particular type of GPU and then come back and say, "Actually, I want to get more capacity elsewhere; can you take this back?" We do this all day, every day; we are constantly moving customers around while increasing total net capacity.

So we have the technology, we have the secure infrastructure to do this, and we also have a large customer base with demand, so whenever we find ourselves with unused capacity, it gets allocated and configured quickly.

Brent Thill (Analyst):

That’s very helpful. I appreciate it.

Operator:

Your next question comes from Mark Moerdler of Sanford Bernstein. Please go ahead.

Mark L. Moerdler (Analyst):

Thank you very much for taking my question, and congratulations on the quarter. Doug, you gave some information earlier tonight that I'd like to dig into. At the financial analyst meeting, Clay presented a slide showing the revenue and expenses of a single data center. Doug and Clay, can you walk through the cash flow of that same data center, from the data center commitment to the hardware, and how it turns cash flow positive? And how does this aggregate across multiple data centers? I would really appreciate some color on that.

Larry Ellison (Co-founder, Chairman, and CTO):

Of course. Happy to answer, Mark. As we discussed earlier in this call, it starts with the actual data center itself and the accompanying power capacity. The way we build it is that we do not incur any cash expenditures until the data center is fully delivered, configured, and fit for purpose.

So it really comes down to what the cash flow looks like for the capacity that goes into the data center. As I mentioned earlier, that depends on the exact business and financial model we use to procure that capacity. In some cases, customers want to bring their own hardware; then we have no capital expenditure for it, and our costs come down to the data center itself, possibly some networking equipment, and labor.

In other models, vendors want to lease us that capacity, in which case the lease payments start when the capacity is configured for the customer. Customer cash comes in, and we pass that cash through to the various vendors.

There is also, of course, the model where Oracle uses its own cash to pay for the hardware upfront and then brings the capacity online. That is obviously the most cash-intensive upfront, and it is followed by a depreciation schedule over the next few years.

So it really depends on the exact business and financial model used for each data center. You also asked how they stack together. Fortunately, we don’t need calculus for this; basic arithmetic is enough, because they simply add up. If you have a timeline for one data center and a timeline for a second data center, the cash flows add together. Obviously, if one data center comes in earlier, its expenses and revenues come in earlier; if a data center slips, its expenses and revenues move out as well.
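The "they just add up" point can be shown in a few lines of Python. This is a hypothetical sketch: the single-site profile (no spend before delivery, an upfront hardware outlay in the first period, then net inflows) corresponds loosely to the Oracle-funded model described above, and all figures are invented units. A bring-your-own-chip or leased-capacity site would simply use a different per-site profile.

```python
from typing import Dict, List


def shift(timeline: List[float], start: int) -> Dict[int, float]:
    """Place a per-period cash-flow timeline so it begins at period `start`."""
    return {start + i: cf for i, cf in enumerate(timeline)}


def aggregate(*placed: Dict[int, float]) -> Dict[int, float]:
    """Portfolio cash flow: sum each data center's cash flow period by period."""
    total: Dict[int, float] = {}
    for timeline in placed:
        for period, cf in timeline.items():
            total[period] = total.get(period, 0.0) + cf
    return dict(sorted(total.items()))


# Hypothetical single-site profile (arbitrary units): nothing is spent before
# the site is delivered, then an upfront hardware outlay, then net inflows.
SITE = [-50.0, 10.0, 15.0, 15.0, 15.0]

if __name__ == "__main__":
    portfolio = aggregate(shift(SITE, 0), shift(SITE, 2))
    print(portfolio)
```

Here the second site starts two periods later, so its upfront outlay lands in period 2 and the script prints `{0: -50.0, 1: 10.0, 2: -35.0, 3: 25.0, 4: 30.0, 5: 15.0, 6: 15.0}`. Moving a site's start period moves both its expenses and its revenues, as described on the call.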

Mark L. Moerdler (Analyst):

Very helpful. I appreciate it.

Operator:

Your last question comes from John DiFucci of Guggenheim Securities. Please go ahead.

John DiFucci (Analyst):

Okay, I’m not going to make you kiss my ring. Actually, I’m John DiFucci. Anyway, sorry. Listen, a lot of questions about infrastructure have already been asked, and they are very important, because that is a significant part of your growth. But I have a question about the applications business. Mike, you said applications will accelerate this year. Why are you confident in this business when your SaaS peers are all seeing the opposite, with growth slowing? Especially since we had similar expectations for Oracle's applications business last year, but we didn't really start seeing it until the fourth quarter.

In the field we have heard about "One Oracle," your go-to-market motions, and how applications and infrastructure are now more integrated rather than separate. You also talked about combining the vertical and horizontal application teams. Is that the case? Is it mainly the go-to-market motion? Are there product factors or other aspects we should consider? Thank you.

Mike Sicilia (Co-CEO, responsible for applications):

Well, yes, thank you for your question. First, I think it's a combination of several things. But let me start with what I believe is happening in the industry. All of our competitors are largely in the "best-of-breed" business because they are not in the overall application business. They are not in the backend business, they are not in the industry business, and they are not in everything in between. They are not in the front office, backend, or middle office. We are the only application company in the world that sells a complete suite of applications.

Then you add in the baked-in AI, the AI halo effect, with AI built directly into our applications. We already have over 400 AI features live in Fusion. I mentioned that 274 customers are live on our clinical AI agents, and unlike traditional SaaS applications, the rollout of these clinical AI agents is measured in weeks. In an industry like healthcare, doing anything at this scale used to take months or years; now it takes only weeks. And by the way, John, customers are implementing these things entirely on their own. They don’t need our help. You just roll them out, and they work.

So we are in the application suite business. We are building AI into our backend applications, our frontend applications. We are building applications that are themselves AI agents. That’s why you see our industry application growth rate at 21% for this quarter. Our Fusion ERP grew 17%, SCM grew 18%, HCM grew 14%, CX grew 12%, and again, all on a larger base.

The next part is the AI data platform. If you want an industry application suite, then want to create your own AI agents, and then want to unlock your own enterprise data on top of that, we are the only ones providing all of these elements to customers. Customers are getting tired of spending on "best-of-breed," because the integration costs are too high and it's hard to bolt AI onto all of those products when you aren't actually retiring anything in the process, and that puts us in a very unique position. We are also starting to see this in the numbers, John, with applications deferred revenue now growing 14%, faster than the 11% revenue growth in the quarter. So for all these reasons, I am optimistic about the future of our applications business. It is the growth engine for Oracle.

John DiFucci (Analyst):

Thank you, Mike. As you were speaking, it became clearer to me: when I first heard about "One Oracle," I thought it was a go-to-market move, but it is more than that. It is also a product story. It is all of it. So thank you very much.

Mike Sicilia:

Thank you.

Ken Bond:

Thank you, John. A replay of this conference call will be available on our investor relations website for 24 hours. Thank you all for joining us today. With that, I'll hand the call back to Tiffany to close.

Operator:

Ladies and gentlemen, this concludes today's conference. Thank you for your participation. You may now disconnect.

---

This transcript may not be 100% accurate and may contain spelling errors and other inaccuracies.