Broadcom falls 5% after earnings call as $73 billion AI backlog underwhelms; CEO says the threat of customers developing their own chips is overstated

Broadcom stated that it currently has an AI product order backlog of $73 billion, which will be delivered over the next six quarters. This figure disappointed some investors, although CEO Hock Tan later clarified that this number is a "minimum value" and that he expects more orders to come in. Nevertheless, after the earnings call the stock turned lower, falling more than 5%. Regarding the market debate on "customers developing chips independently," Hock Tan said this is an "exaggerated assumption."

Broadcom's AI market sales outlook failed to meet investors' high expectations, leading to a decline in stock price after hours.

On Thursday, Broadcom CEO Hock Tan stated during a conference call that the company currently has $73 billion in AI product backlog, which will be delivered over the next six quarters. This figure disappointed some investors, although Hock Tan later clarified that this number is the "minimum" and expects more orders to come in.

Hock Tan stated:

This is just the data as of now; do not view the $73 billion as the total revenue we will deliver over the next 18 months. We are simply saying we have this many orders now, and the booking volume has been accelerating.

Regarding the market debate on "customers developing their own chips," Hock Tan stated that this is a "greatly exaggerated assumption," pointing out:

As silicon technology continues to evolve, large language model developers need to weigh resource allocation, especially when competing with commercial GPUs. Custom chip development is a time-consuming task, and there is no evidence that customers will fully shift to self-development.

He emphasized that in custom-designed, hardware-driven XPUs, performance can be greatly enhanced, but noted that the development speed of commercial GPUs shows no signs of slowing down.

Hock Tan revealed that the company secured an $11 billion order from AI startup Anthropic PBC in the fourth quarter. This order comes on top of the $10 billion order from the third quarter. He added that Broadcom also signed a $1 billion order with another customer, whose identity he did not disclose.

Key points from the conference call:

Record-breaking performance: In fiscal year 2025, the company's total revenue grew by 24% year-on-year, reaching a record $64 billion, primarily driven by AI, semiconductor, and VMware businesses. Among them, AI business revenue grew by 65% year-on-year, reaching $20 billion.

Explosive growth in AI business orders: As of now, the company holds a total AI-related backlog of $73 billion, which is expected to be delivered over the next 18 months. This backlog accounts for nearly half of the company's total backlog of $162 billion.

Strong prospects for AI business: The company expects that in the first quarter of fiscal year 2026, AI business revenue will double year-on-year, reaching $8.2 billion. Management believes that the growth momentum of the AI business will continue to accelerate in 2026.

Expansion of custom AI chip (XPU) business: The company won its fifth XPU customer and secured a $1 billion order. At the same time, existing customer Anthropic added a large order of $11 billion, demonstrating the market's strong recognition of the company's custom AI accelerator solutions.

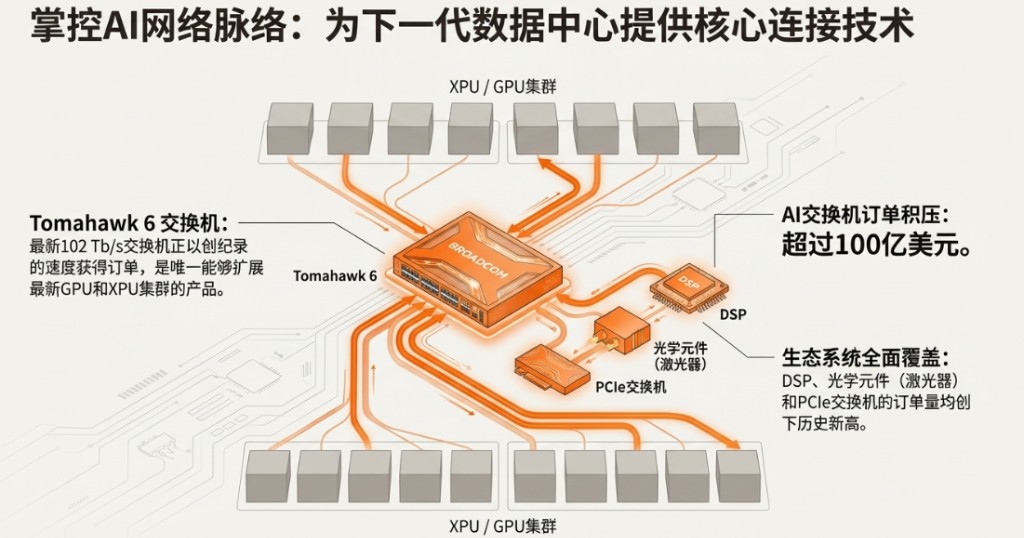

Strong Demand for AI Network Products: Customers are accelerating the deployment of data center infrastructure, with particularly strong demand for AI switches, and related backlog orders have exceeded $10 billion.

Generous Shareholder Returns: The company announced a 10% increase in quarterly dividends to $0.65 per share and extended its stock repurchase program.
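The ratios behind the key points above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the company's disclosure: the variable names are illustrative, the previous quarterly dividend of $0.59 is taken from the call transcript, and the per-quarter average assumes, purely for illustration, that the backlog ships evenly over the six quarters.

```python
from decimal import Decimal

# Figures quoted in the article, in billions of US dollars.
AI_BACKLOG = Decimal("73")
TOTAL_BACKLOG = Decimal("162")
DELIVERY_QUARTERS = 6  # "next six quarters" (18 months)

# Quarterly dividend per share before and after the announced increase.
OLD_DIVIDEND = Decimal("0.59")
NEW_DIVIDEND = Decimal("0.65")


def pct(part, whole):
    """Return part / whole as a percentage rounded to one decimal place."""
    return (part / whole * 100).quantize(Decimal("0.1"))


# AI backlog as a share of the total backlog ("nearly half").
ai_share = pct(AI_BACKLOG, TOTAL_BACKLOG)

# Average quarterly delivery, ASSUMING an even spread (hypothetical).
avg_per_quarter = (AI_BACKLOG / DELIVERY_QUARTERS).quantize(Decimal("0.1"))

# Size of the dividend increase and the implied annual payout per share.
dividend_increase = pct(NEW_DIVIDEND - OLD_DIVIDEND, OLD_DIVIDEND)
annual_dividend = NEW_DIVIDEND * 4

print(f"AI share of total backlog: {ai_share}%")
print(f"Average delivery per quarter: ${avg_per_quarter}B")
print(f"Dividend increase: {dividend_increase}%")
print(f"Annual dividend per share: ${annual_dividend}")
```

Since management described the $73 billion as a floor, the even-spread average is best read as a lower bound on the implied quarterly run rate rather than a forecast.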

According to Wallstreetcn, Broadcom's fourth-quarter revenue and profit both hit record highs, with AI chip revenue growth accelerating to 74%. After hours, Broadcom's stock initially rose 4% but reversed after the earnings call, falling more than 5%.

(After-hours stock price rose and then fell)

Expansion of Custom AI Chip Business Landscape

Broadcom stated that significant breakthroughs have been made in the performance of custom AI chips.

After securing an additional $11 billion order from an existing customer in the fourth quarter, the company announced that it also won its fifth custom AI chip (XPU) customer during the quarter, with this $1 billion order set to be delivered by the end of 2026. Hock Tan stated:

These XPUs are not only used for customers' internal workloads but are also being extended to external markets.

Google's TPU business is the prime example. According to Hock Tan, the Google TPUs used to create Gemini are also being used by companies such as Apple, Cohere, and SSI for AI cloud computing. The scale of this commercialization model could be "very huge." Hock Tan emphasized:

The TPU primarily replaces commercial GPUs, rather than other custom chips, as investing in custom accelerators is a multi-year strategic decision, not a short-term trading move.

Regarding the market debate on "customer self-development," Hock Tan stated that this is an "exaggerated assumption." He pointed out:

As silicon technology continues to evolve, large language model developers need to weigh resource allocation, especially when competing with commercial GPUs. Custom chip development is a time-consuming task, and there is no evidence that customers will fully shift to self-development.

Explosion in Demand for AI Network Infrastructure

Broadcom's AI network business is experiencing strong growth momentum.

The company's latest 102 Tbps Tomahawk 6 switch has backlog orders exceeding $10 billion. Hock Tan stated:

This is currently the first and only product on the market that reaches this performance level, which is essential for expanding the latest GPU and XPU clusters.

In addition to switches, the company has also set record highs in orders for optical components such as DSPs and lasers, as well as PCIe switches. According to Hock Tan, of the $73 billion in AI backlog orders, about $20 billion comes from components other than XPUs.

He emphasized that customers build out data center infrastructure before deploying AI accelerators, which has pulled forward demand for network equipment.

Regarding silicon photonics technology, Hock Tan stated that the company has the technology in reserve and is continuing to develop silicon photonic switches and interconnect products with a bandwidth of 1.6 Tbps. However, he believes the market is not yet ready for full deployment, and engineers are still trying to extend the useful life of copper cabling and pluggable optical modules as much as possible.

Hock Tan stated:

When you can't do well with pluggable optical modules, you will turn to silicon photonics technology, but this won't happen quickly.

Transformation of System-Level Solution Providers

Broadcom is transforming from a standalone chip supplier to a system-level solution provider, selling overall rack systems to its fourth customer instead of individual chips.

Hock Tan stated that because AI data centers involve numerous components, packaging and selling the overall system and taking responsibility for its overall performance "is starting to make sense."

This shift will affect the company's gross margin. Kirsten Spears, Chief Financial Officer and Chief Accounting Officer, pointed out:

System sales mean higher costs for non-self-produced components, leading to a decline in gross margin.

The company expects the consolidated gross margin for the first quarter of fiscal year 2026 to decline by approximately 100 basis points quarter-over-quarter, primarily reflecting the impact of the increased proportion of AI revenue.

However, management emphasized that the operating profit margin will not decline in tandem. Hock Tan stated that the AI business is growing fast enough for the company to gain operating leverage on operating expenses, so that operating profit continues to grow at a high rate. Spears added:

Although the gross margin will decline, both gross profit and operating profit will increase.
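A falling margin alongside rising profit is simple arithmetic once revenue grows fast enough. The sketch below illustrates this with figures quoted on the call (fourth-quarter revenue of about $18 billion at a 77.9% gross margin, and first-quarter guidance of $19.1 billion with a roughly 100 basis point sequential decline). The revenue figure is rounded and the guidance is approximate, so the results are indicative only; the function and variable names are illustrative.

```python
# Figures quoted on the call, in billions of US dollars (rounded).
Q4_REVENUE = 18.0          # record fourth-quarter revenue
Q4_GROSS_MARGIN = 0.779    # 77.9% of revenue
Q1_REVENUE_GUIDE = 19.1    # first-quarter revenue guidance
MARGIN_DECLINE_BPS = 100   # guided sequential decline in gross margin


def gross_profit(revenue, margin):
    """Gross profit in billions, given revenue and gross margin (0..1)."""
    return revenue * margin


# One basis point is one hundredth of a percentage point.
q1_gross_margin = Q4_GROSS_MARGIN - MARGIN_DECLINE_BPS / 10_000

q4_gp = gross_profit(Q4_REVENUE, Q4_GROSS_MARGIN)
q1_gp = gross_profit(Q1_REVENUE_GUIDE, q1_gross_margin)

print(f"Q4 gross profit: ${q4_gp:.2f}B at {Q4_GROSS_MARGIN:.1%}")
print(f"Q1 gross profit: ${q1_gp:.2f}B at {q1_gross_margin:.1%}")
print(f"Change in gross profit: ${q1_gp - q4_gp:+.2f}B")
```

Under these inputs the margin drops by one percentage point while gross profit still rises, because the revenue increase outweighs the lower margin.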

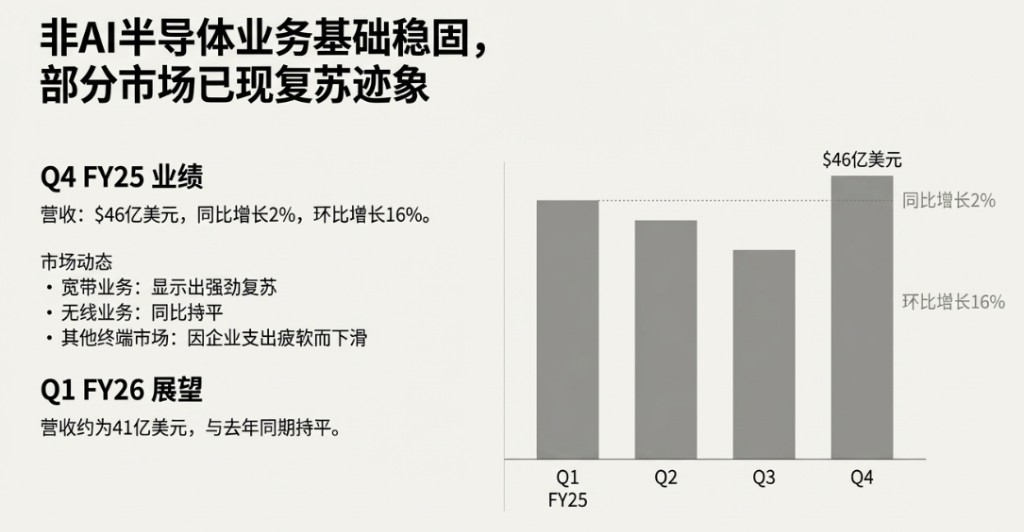

Non-AI Business Awaiting Recovery Signals

Non-AI semiconductor revenue for the fourth quarter was $4.6 billion, a year-on-year increase of 2% and a quarter-over-quarter increase of 16%, mainly benefiting from seasonal advantages in the wireless business.

On a year-on-year basis, the broadband business showed a solid recovery and the wireless business was flat, but other end markets declined, and corporate spending has not shown obvious signs of recovery. Hock Tan stated:

The company sees very good recovery in the broadband sector, but other businesses remain stable.

He speculated that AI may have siphoned off spending from other areas of enterprises and other fields of large-scale data centers. Management believes it will take one to two more quarters to see a sustainable recovery, but demand has not further deteriorated.

The company forecasts that non-AI semiconductor revenue for the first quarter of fiscal year 2026 will be approximately $4.1 billion, flat compared to the same period last year, with a sequential decline due to seasonal factors in the wireless business. For the full fiscal year 2026, management expects AI revenue to continue accelerating and become a major growth driver, while non-AI semiconductor revenue will remain stable, and infrastructure software revenue will grow in the low double digits.
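The first-quarter guidance figures quoted on the call are internally consistent, which can be verified by reconciling the segments. This is a minimal sketch using the guided amounts from the call (consolidated revenue of $19.1 billion, semiconductor revenue of about $12.3 billion, AI semiconductor revenue of $8.2 billion, and infrastructure software revenue of about $6.8 billion); the dictionary keys are illustrative, and all inputs are approximate guidance rather than reported results.

```python
from decimal import Decimal

# Q1 FY2026 guidance as quoted on the call, in billions of US dollars.
guidance = {
    "consolidated": Decimal("19.1"),
    "semiconductor": Decimal("12.3"),
    "ai_semiconductor": Decimal("8.2"),
    "infrastructure_software": Decimal("6.8"),
}

# The two reporting segments should add up to consolidated revenue.
segments_total = guidance["semiconductor"] + guidance["infrastructure_software"]

# Non-AI semiconductor revenue is what remains after AI semiconductors.
implied_non_ai = guidance["semiconductor"] - guidance["ai_semiconductor"]

# AI semiconductors as a share of guided consolidated revenue.
ai_share = (
    guidance["ai_semiconductor"] / guidance["consolidated"] * 100
).quantize(Decimal("0.1"))

print(f"Segments total: ${segments_total}B")
print(f"Implied non-AI semiconductor revenue: ${implied_non_ai}B")
print(f"AI share of consolidated revenue: {ai_share}%")
```

The implied non-AI figure matches the approximately $4.1 billion forecast given separately for that business.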

Transcript of Broadcom's Q4 2025 fiscal year earnings call, translated as follows (assisted by AI tools):

Meeting Name: Broadcom Q4 2025 Fiscal Year Earnings Call Minutes

Meeting Time: December 11, 2025

Host

Welcome to Broadcom's Q4 and full year 2025 financial performance earnings call. Now, I will hand the meeting over to Broadcom's Head of Investor Relations, Ji Yoo, for the opening remarks.

Ji Yoo (Head of Investor Relations)

Thank you, Strey, and good afternoon, everyone. Joining me today are President and CEO Hock Tan, Chief Financial Officer Kirsten Spears, and President of the Semiconductor Solutions Division Charlie Kawwas.

Broadcom released a press release and financial statements after today's market close, detailing our financial performance for Q4 and the full year of fiscal 2025. If you did not receive it, you can find it in the "Investors" section of Broadcom's official website. This earnings call is being webcast live, and an audio replay of the meeting will be available in the "Investors" section of Broadcom's official website for one year.

In the upcoming prepared remarks, Hock Tan and Kirsten will provide detailed insights into our Q4 fiscal 2025 performance, guidance for Q1 fiscal 2026, and comments on the current business environment. After the prepared remarks, we will enter the Q&A session. Please refer to the press release we issued today and the recent filings submitted to the U.S. Securities and Exchange Commission (SEC) for specific risk factors that could cause actual results to differ materially from the forward-looking statements made during this call.

In addition to U.S. Generally Accepted Accounting Principles (GAAP), Broadcom also reports certain non-GAAP financial metrics. A reconciliation table between GAAP and non-GAAP metrics has been included in the attachment to today's press release. Comments during today's meeting will primarily be based on our non-GAAP financial results.

Now, I will turn the call over to Hock Tan.

Hock Tan (CEO)

Thank you, Ji, and thank you all for joining our meeting today. We have just concluded Q4 of fiscal 2025, and before discussing that quarter in detail, let me first summarize the full year.

In fiscal 2025, the company's consolidated revenue grew by 24% year-over-year to a record $64 billion, primarily driven by the AI semiconductor and VMware businesses. AI business revenue grew by 65% year-over-year to $20 billion, driving the company's semiconductor business revenue to a record $37 billion for the full year.

In our infrastructure software business, the strong adoption of VMware Cloud Foundation (which we call VCF) drove a 26% year-over-year revenue growth, reaching $27 billion. Overall, 2025 is shaping up to be another strong year for Broadcom, as we see customer spending momentum in the AI sector continuing to accelerate into 2026.

Now, let's look at the performance for the fourth quarter of 2025. Total revenue reached a record $18 billion, a 28% year-over-year increase, exceeding our guidance, thanks to better-than-expected growth in AI semiconductors and infrastructure software business. Fourth-quarter consolidated adjusted EBITDA reached a record $12.12 billion, a 34% year-over-year increase.

Next, I will detail the performance of our two major business segments. In the semiconductor business, revenue was $11.1 billion, with year-over-year growth accelerating to 35%. This strong growth was driven by AI semiconductor revenue, which was $6.5 billion, a 74% year-over-year increase. This represents a growth trajectory that has exceeded tenfold over the 11 quarters we have reported this business line.

Our custom accelerator business more than doubled year-over-year, as we see customers increasingly adopting our custom chips, which we call XPU, for training their large language models (LLM) and commercializing platforms through inference APIs and applications.

I would like to add that these XPUs are not only used by our customers for training and inference of their internal workloads. In some cases, the same XPUs are also being offered externally to other LLM peers, with Google being a prime example, as its TPUs used to create Gemini are also utilized by companies like Apple, Cohere, and SSI for AI cloud computing. We see that the potential scale of this could be enormous.

As you know, last quarter (Q3 FY2025), we received a $10 billion order for the latest TPU Ironwood racks sold to Anthropic, which is the fourth custom customer we mentioned. In this quarter (Q4), we received an additional $11 billion order from the same customer, which will be delivered by the end of 2026. But this does not mean that our other two customers are using TPUs.

In fact, they are more inclined to take control of their own destiny and continue to advance their journey of creating their own custom AI accelerators (i.e., XPUs) over the years. Today, I am pleased to announce that in this quarter, we secured our fifth XPU customer through a $1 billion order, which will be delivered by the end of 2026.

Now let's talk about the AI networking business. The demand here is even stronger, as we see customers building their data center infrastructure in advance of deploying AI accelerators. Our current backlog for AI switches has exceeded $10 billion, **as our latest 102 Tbps Tomahawk 6 switch, the first and only product on the market with this capability, continues to receive orders at a record pace.**

This is just part of our business. We have also received record orders in DSP, lasers, and other optical components, as well as PCIe switches, all of which will be deployed in AI data centers.

All these components combined with our XPUs bring our total AI order backlog to over $73 billion, which is nearly half of Broadcom's $162 billion consolidated backlog. We expect this $73 billion in AI backlog orders to be delivered within the next 18 months. In the first quarter of fiscal year 2026, we expect AI business revenue to double year-over-year, reaching $8.2 billion.

Looking at the non-AI semiconductor business, fourth-quarter revenue was $4.6 billion, a year-over-year increase of 2% and a quarter-over-quarter increase of 16%, thanks to seasonal benefits in the wireless business. In terms of year-over-year growth, the broadband business showed solid recovery, the wireless business remained flat, while all other end markets experienced declines, as corporate spending has yet to show clear signs of recovery.

Therefore, in the first quarter, we forecast non-AI semiconductor revenue to be approximately $4.1 billion, flat compared to the same period last year, with a quarter-over-quarter decline due to seasonal factors in the wireless business.

Next, I will talk about our infrastructure software division. Fourth-quarter infrastructure software revenue was $6.9 billion, a year-over-year increase of 19%, exceeding our expectation of $6.7 billion. Booking volumes remained strong, with total contract value booked in the fourth quarter exceeding $10.4 billion, compared to $8.2 billion in the same period last year. Our year-end infrastructure software backlog was $73 billion, up from $49 billion in the same period last year. We expect renewals in the first quarter to be seasonal and forecast infrastructure software revenue to be approximately $6.8 billion. However, we still expect low double-digit percentage growth in infrastructure software revenue for fiscal year 2026.

Here is our outlook for 2026. Directionally, we expect AI revenue to continue to accelerate and become a major driver of our growth, while non-AI semiconductor revenue will remain stable. Infrastructure software revenue will continue to be driven by VMware with low double-digit growth rates. For the first quarter of 2026, we expect consolidated revenue to be approximately $19.1 billion, a year-over-year increase of 28%, and we expect adjusted EBITDA to be approximately 67% of revenue.

Next, I will hand it over to Kirsten.

Kirsten Spears (Chief Financial Officer and Chief Accounting Officer)

Thank you, Hock. Now I will provide more details on the fourth-quarter financial performance.

Consolidated revenue for the quarter was a record $18 billion, a year-over-year increase of 28%. The gross margin for the quarter was 77.9% of revenue, better than our initial guidance, thanks to higher software revenue and the product mix within semiconductors. Consolidated operating expenses were $2.1 billion, of which $1.5 billion was for R&D expenses. Fourth-quarter operating income was a record $11.9 billion, a year-over-year increase of 35%.

On a quarter-over-quarter basis, although the gross margin decreased by 50 basis points due to the semiconductor product mix, the operating profit margin increased by 70 basis points to 66.2% thanks to good operating leverage. The adjusted EBITDA was $12.12 billion, accounting for 68% of revenue, exceeding our guidance of 67%. This figure does not include $148 million in depreciation.

Now let's review the profit and loss situation of our two business segments, starting with the semiconductor business. Our semiconductor solutions division achieved record revenue of $11.1 billion, with year-on-year growth accelerating to 35% driven by AI. In this quarter, semiconductor revenue accounted for 61% of total revenue. The gross margin for the semiconductor solutions division was approximately 68%. Due to increased investment in cutting-edge AI semiconductor R&D, operating expenses grew by 16% year-on-year to $1.1 billion. The operating profit margin for the semiconductor business was 59%, an increase of 250 basis points year-on-year.

Next, let's look at the infrastructure software business. Infrastructure software revenue was $6.9 billion, a year-on-year increase of 19%, accounting for 39% of total revenue. The gross margin for infrastructure software this quarter was 93%, compared to 91% in the same period last year. Operating expenses for this quarter were $1.1 billion, resulting in an operating profit margin of 78% for infrastructure software. In contrast, the operating profit margin in the same period last year was 72%, reflecting the completion of VMware's integration work.

Next is cash flow. This quarter's free cash flow was $7.5 billion, accounting for 41% of revenue. Our capital expenditures were $237 million. Days sales outstanding for the fourth quarter were 36 days, compared to 29 days in the same period last year. Our inventory at the end of the fourth quarter was $2.3 billion, a quarter-over-quarter increase of 4%. Days of inventory on hand for the fourth quarter were 58 days, compared to 66 days in the third quarter, as we continue to manage inventory strictly across the ecosystem. We held $16.2 billion in cash at the end of the fourth quarter, an increase of $5.5 billion quarter-over-quarter due to strong cash flow generation. Our total fixed-rate debt of $67.1 billion has a weighted average coupon rate of 4% and 7.2 years to maturity.

Regarding capital allocation. In the fourth quarter, we paid $2.8 billion in cash dividends to shareholders based on a quarterly common stock cash dividend of $0.59 per share. In the first quarter, we expect approximately 4.97 billion diluted shares on a non-GAAP basis, excluding any potential impact from stock buybacks.

Now let me summarize our financial performance for fiscal year 2025. Our revenue reached a record $63.9 billion, with organic growth accelerating to a year-on-year increase of 24%. Semiconductor revenue was $36.9 billion, a year-on-year increase of 22%. Infrastructure software revenue was $27 billion, a year-on-year increase of 26%. The adjusted EBITDA for fiscal year 2025 was $43 billion, accounting for 67% of revenue. Free cash flow increased by 39% year-on-year to $26.9 billion.

In fiscal year 2025, we returned $17.5 billion in cash to shareholders through $11.1 billion in dividends and $6.4 billion in stock buybacks and cancellations. Consistent with our ability to generate more cash flow than the previous year, we announced an increase in the quarterly common stock cash dividend to $0.65 per share in the first quarter of fiscal year 2026, a 10% increase from the previous quarter. We plan to maintain this target quarterly dividend throughout the 2026 fiscal year, but this requires approval from the board of directors each quarter.

This means that our annual common stock dividend for the 2026 fiscal year will reach a record $2.60 per share, an increase of 10% year-over-year. I want to emphasize that this marks the 15th consecutive increase in the annual dividend since we began paying dividends in fiscal year 2011. The board has also approved an extension of our stock repurchase program, with a remaining authorization of $7.5 billion that runs through the end of calendar year 2026.

Finally, regarding our guidance. Our guidance for the first quarter is consolidated revenue of $19.1 billion, an increase of 28% year-over-year. We forecast semiconductor revenue of approximately $12.3 billion, an increase of 50% year-over-year. Among this, we expect AI semiconductor revenue in the first quarter to be $8.2 billion, an increase of approximately 100% year-over-year. We expect infrastructure software revenue to be approximately $6.8 billion, an increase of 2% year-over-year.

To assist you in building your models, we expect the consolidated gross margin for the first quarter to decline by approximately 100 basis points quarter-over-quarter, primarily reflecting the increased share of AI revenue. Just a reminder, the full-year consolidated gross margin will be influenced by the mix of infrastructure software and semiconductor revenue as well as the internal product mix of semiconductors.

We expect adjusted EBITDA for the first quarter to be approximately 67% of revenue. We anticipate that the non-GAAP tax rate for the first quarter and the 2026 fiscal year will rise from 14% to approximately 16.5%, due to the impact of the global minimum tax and changes in the geographic mix of revenue compared to the 2025 fiscal year.

My prepared remarks conclude here. Host, please open the Q&A session.

Q&A Session

Host

Thank you. (Operator instructions) Our first question comes from Vivek Arya of Bank of America.

Question: Vivek Arya

Thank you. Hock, I want to clarify: you mentioned $73 billion in orders for the AI business over the next 18 months. Does this mean that AI revenue for the 2026 fiscal year will be around $50 billion? I want to confirm that my understanding is correct. Then the main question is, Hock, there is now a debate about "customer-owned tooling," meaning your ASIC customers may want to do more themselves. How do you see the evolution of your XPU content and share with your largest customers over the next one to two years? Thank you.

Answer: Hock Tan (CEO)

Okay, to answer your first question, we are saying that is correct. As of now, we indeed have $73 billion in backlog orders, including XPUs, switches, DSPs, and lasers for AI data centers, which we expect to ship within the next 18 months.

**Clearly, this is just the data as of now. We fully expect more orders to come in during this period. So, do not view the $73 billion as the total revenue we will ship in the next 18 months. We are just saying we have this many orders now, and the booking volume has been accelerating. Frankly, the booking growth we are seeing is not limited to XPUs, but also includes switches, DSPs, and all other components used for AI data centers.**

The booking situation we have seen over the past three months is unprecedented. Particularly with the Tomahawk 6 switch, it is one of the fastest-deployed products among all the switch products we have launched. This is very interesting, partly because it is currently the only product on the market that achieves 102 Tbps performance, which is essential for scaling the latest GPU and XPU clusters.

As for your broader question about the future of XPU, my answer is to not take external claims at face value. This is a gradual process, a journey that spans several years. Many players involved in LLM, although not many, have developed their own custom AI accelerators for good reasons.

If you use a general-purpose GPU, you can only do what can be achieved in software and kernels, not in hardware. In custom-designed, hardware-driven XPUs, performance can be greatly enhanced. We have seen this with TPUs and in all the accelerators we are developing for other customers, performing better in all aspects such as invocation, training, inference, etc.

But does this mean that over time, they all want to do it themselves? Not necessarily, because silicon technology is constantly updating and evolving. If you are an LLM player, you need to consider where to allocate resources to compete in this field, especially when you ultimately have to compete with commercial GPUs that are not slowing down in their evolution. So I think the concept of "customer-owned tooling" is an exaggerated assumption, and frankly, I don't think it will happen.

Host

Our next question comes from Ross Seymore at Deutsche Bank.

Question: Ross Seymore

Hi, thank you for the question. Hock, I want to return to a point you mentioned earlier, that TPUs are increasingly being sold to other customers in a commercialized way. Do you see this as a substitution effect for those customers who could have collaborated with you, or is it actually expanding the market? What kind of financial impact do you see from your perspective?

Answer: Hock Tan (CEO)

That's a great question, Ross. The most obvious trend we see now is that users of TPUs, their most common alternative choice is commercial GPUs. Because replacing it with another custom chip is a different situation. Investing in custom accelerators is a multi-year journey, a strategic directional decision, rather than necessarily a very transactional or short-term move.

Shifting from GPUs to TPUs is a transactional move. Developing your own AI accelerator is a long-term strategic move, and nothing can stop you from continuing to invest towards the ultimate goal of creating and deploying your own custom AI accelerators for success. That’s the dynamic we see.

Host

The next question comes from JP Morgan's Harlan Sur.

Question: Harlan Sur

Yes, good afternoon. Thank you for the question, and congratulations on your strong performance, guidance, and execution. Hock, I just want to confirm again that you mentioned the total AI backlog for the next six quarters (18 months) is $73 billion, correct? This is just a snapshot of your current order book. But considering your delivery cycles, I believe customers can and will place AI orders in the fourth, fifth, and sixth quarters. So over time, the backlog of orders for more shipments in the second half of 2026 may continue to increase. Is that understanding correct? Then, given the strong and growing backlog, the question is whether the team has sufficient supply commitments for 3nm and 2nm wafers, substrates, and HBM to support all the demand on your order book. I know one area where you are trying to mitigate this issue is advanced packaging, and you are building a facility in Singapore. Can you remind us which part of the advanced packaging process the team is focusing on at the Singapore facility? Thank you.

Answer: Hock Tan (CEO)

Thank you. To answer your first, simpler question, you are correct. You could say that $73 billion is the backlog we currently hold and will ship over the next six quarters. You could also say that, given our delivery cycles, we expect more orders to be absorbed into our backlog and shipped over the next six quarters. So, you can look at it this way: we expect a minimum revenue of $73 billion over the next six quarters, but as more orders come in, we do expect revenue to be higher. Our delivery cycles can vary from six months to a year, depending on the specific product.

Regarding the supply chain issue you mentioned, specifically the key supply chains in silicon and packaging, yes, this is an interesting challenge we have been continuously addressing. With strong demand growth and the need for more innovative packaging technologies, as every custom accelerator is now composed of multiple chips, packaging has become a very interesting and technical challenge. The purpose of building the facility in Singapore is to partially internalize these advanced packaging capabilities. We believe we have enough demand to achieve internalization, which is not only a cost consideration but also for supply chain security and delivery. As you pointed out, we are building a fairly large advanced packaging facility in Singapore purely to address the issues related to advanced packaging.

In terms of silicon, we rely on the same source: TSMC in Taiwan. Therefore, we have been striving for more 2nm and 3nm capacity. So far, we have not encountered any limitations in this regard. But again, as time goes on and our backlog increases, we will need to observe the situation.

Host

The next question comes from Jefferies' Blayne Curtis.

Question: Blayne Curtis

Hey, good afternoon. Thank you for the question. I want to ask about the initial $10 billion deal you mentioned regarding the sale of racks. For the subsequent orders and the fifth customer, could you describe how you will deliver these products? Will it be the delivery of XPUs or the entire rack? Then, could you walk us through some of the details, such as what the deliverables are? It's clear that Google uses its own networking equipment. So I'm also curious, will this completely replicate Google's approach, or will it include your own networking equipment? Thank you.

Answer: Hock Tan (CEO)

This is a very complex question, Blayne. Let me tell you what it is; it is a system sale. We have so many components, far beyond just XPU, that are used in any AI system for hyperscale data centers. Yes, we believe it makes sense to sell it as a system and take responsibility for the entire system. People will understand this better as a system sale. So for this fourth customer, we are selling it as a system that includes our key components. It’s no different from selling chips; we will certify its final operational capability throughout the sales process.

Host

The next question comes from Stacy Rasgon at Bernstein.

Question: Stacy Rasgon

Hi, everyone. Thank you for the question. I want to talk about gross margins, which may relate to the previous question. I understand why the AI business might dilute gross margins to some extent; we have the cost pass-through of HBM, and it can be inferred that as system sales increase, the dilution effect will become more pronounced. You have hinted at this in the past, but I would like to know if you could clarify this more explicitly.

As AI revenue grows and as we start doing system sales, how should we view the gross margin figure, say looking ahead to the next four or six quarters? Will it be in the low 70s? Even at the company level, will it start with a 6? Additionally, I also want to know, I understand that gross margins will decline, but what about operating margins? Do you think you can achieve enough operating leverage on operating expenses to keep operating margins flat, or will operating margins also need to decline?

Answer: Chen Fuyang (CEO)

I’ll have Kirsten provide you with the details, but I can give you a simple macro-level explanation, Stacy. Good question. The phenomenon is that you haven’t seen it impact us yet, even though we have started some system sales. You don’t see this in our numbers, but it will happen. We have publicly stated that the gross margin for AI revenue is lower than other parts of our business, including of course the software business.

However, we expect that as we do more AI business, it will grow so fast that we gain operating leverage on operating expenses, so operating profit dollars should still grow at a high rate. So we expect that even if gross margins start to decline at a macro level, operating leverage will benefit us at the operating margin level.

Answer: Kirsten Spears (Chief Financial Officer and Chief Accounting Officer)

I think Chen Fuyang made a very good point. In the second half of this year, as we start to ship more systems, the situation is straightforward. We will pass on more costs of components that are not produced in-house. You can think of it as the situation with XPU, where we have memory on XPU, and we are passing on those costs. In the racks, we will pass on more costs. So those gross margins will be lower. However, overall, as Chen Fuyang mentioned, our gross profit will increase, but the gross margin will decrease. Due to our leverage, operating profit will increase, but the operating profit margin as a percentage of revenue will slightly decline. However, we will provide more specific guidance as we approach the end of the year.
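The pass-through effect described in the two answers above can be illustrated with a small calculation. The sketch below uses purely hypothetical figures (in billions of dollars), not Broadcom's reported or guided numbers, and the `margins` helper is an illustrative name. It shows how adding revenue that carries a large share of passed-through component cost can raise gross profit and operating profit in dollars while lowering both margins as a percentage of revenue.

```python
# Hypothetical illustration of margin dilution from cost pass-through.
# All figures are invented for the example (in $ billions); they are
# NOT Broadcom's reported or guided numbers.

def margins(revenue, cogs, opex):
    """Return profit dollars and margin ratios for one period."""
    gross_profit = revenue - cogs
    operating_profit = gross_profit - opex
    return {
        "gross_profit": gross_profit,
        "gross_margin": gross_profit / revenue,
        "operating_profit": operating_profit,
        "operating_margin": operating_profit / revenue,
    }

# Baseline period: chip-centric sales with high gross margin.
before = margins(revenue=100.0, cogs=25.0, opex=15.0)

# Later period: 10 of extra system revenue, 7 of which is passed-through
# cost for components not produced in-house; opex grows only slightly.
after = margins(revenue=110.0, cogs=32.0, opex=15.5)

for label, m in (("before", before), ("after", after)):
    print(
        f"{label}: gross profit {m['gross_profit']:.1f}, "
        f"gross margin {m['gross_margin']:.1%}, "
        f"operating profit {m['operating_profit']:.1f}, "
        f"operating margin {m['operating_margin']:.1%}"
    )
```

In this made-up case, profit dollars rise while both percentages fall, which is the direction of change described in the answers. The size of the effect depends entirely on the assumed inputs.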

Host

The next question comes from Jim Schneider at Goldman Sachs.

Question: James Schneider

Good afternoon, thank you for the question. I would like to know if you could more precisely calibrate your expectations for AI revenue in fiscal year 2026. I remember you mentioned that the growth for fiscal year 2026 would accelerate compared to the 65% growth rate in fiscal year 2025, and your guidance for the first quarter is a growth of 100%. So I would like to know if the growth rate in the first quarter can be a good starting point for the expected growth rate for the entire year, or will it be slightly lower than that? Additionally, could you clarify separately whether the $1 billion order you received from the fifth customer is the same as the OpenAI announcement you made? Thank you.

Answer: Chen Fuyang (CEO)

Wow, there are a lot of questions here. Let me start with 2026. You know, as I mentioned, our backlog has been very dynamic recently and is continuously increasing. You are correct: six months ago we might have said that AI revenue growth for 2026 could be 60% to 70% year-over-year. Today, we are saying that the first quarter of 2026 will double. Looking at the current situation, with orders continuously pouring in, we are giving you a milestone today: a backlog of $73 billion to be shipped over the next 18 months. As I mentioned in response to previous questions, we fully expect this $73 billion to continue to grow over the next 18 months.

This is a moving target, and the numbers will change over time, but it will grow. I find it difficult to pinpoint exactly what 2026 will look like. So I would rather not give you any guidance, which is also the reason we do not provide full-year guidance, but we do provide guidance for the first quarter. Give us some time, and we will provide guidance for the second quarter. You are right, is this an accelerating trend? My answer is that as we enter 2026, it is likely to be an accelerating trend. I hope this answers your question.

Host

The next question comes from Ben Reitzes at Melius Research.

Question: Benjamin Reitzes

Hey everyone, thank you very much. Chen Fuyang, I would like to ask about the contract with OpenAI, which is expected to start in the second half of this year and continue until 2029, involving a capacity of 10 gigawatts. I guess this is the order from the fifth customer. I just want to know if you still believe this will be a driving force? Are there any obstacles to achieving this goal? When do you expect it to start contributing revenue, and how confident are you about this? Thank you very much, Chen Fuyang.

Answer: Chen Fuyang (CEO)

Did you hear my answer to the previous questioner, Jim? Because I didn't answer that question. I didn't answer it then, and I won't answer it now. It is the fifth customer, a real customer, and it will grow. They are on their own multi-year journey with their XPU. That's all we will say about it.

As for what you mentioned about OpenAI, we appreciate that this is a multi-year journey that will last until 2029, as shown in the press release we issued with OpenAI, reaching 10 gigawatts between 2026 and more like 2027, 28, 29. Ben, not 2026, more like 27, 28, 29, 10 gigawatts. That’s the discussion about OpenAI. I call it an agreement, which is a consensus we reached with our respected and valued customer OpenAI regarding the direction of development. But we do not expect much contribution in 2026.

Host

The next question comes from CJ Muse of Cantor Fitzgerald.

Question: C.J. Muse

Yes, good afternoon. Thank you for the question. Chen Fuyang, I want to talk about custom chips, and perhaps discuss how you expect Broadcom's content value to grow generation by generation. As part of this, your competitors have announced the CPX product, which is essentially an accelerator for accelerators, supporting ultra-large context windows. I'm curious whether you think this provides a broader opportunity for your existing five customers to have multiple XPU products. Thank you very much.

Answer: Chen Fuyang (CEO)

Thank you. Yes, you are hitting the nail on the head. The benefit of custom accelerators is that you are trying to avoid a "one-size-fits-all." Generation by generation, these five customers can now create their own versions of XPU custom accelerator chips for training and inference, which are basically two parallel tracks running almost simultaneously. So I will have many versions to deal with. I don't need to create more versions. We already have a lot of different content just by creating these custom accelerators.

**By the way, when you make custom accelerators, you tend to incorporate more unique, differentiated hardware rather than trying to make it work in software and create kernels in software. I know that is tricky too, but think about the difference; you can create those sparse core data routers in hardware instead of handling dense matrix multipliers in the same tool. This is just one of many examples of what creating custom accelerators allows us to do.**

Alternatively, for the same customer, the memory capacity or memory bandwidth between different chips may also vary, simply because even in inference, you want to do more reasoning rather than decoding or other tasks, such as pre-filling. So you really start to create different hardware for the different aspects of how you want to train or infer and run workloads. This is a very fascinating field, and we have seen a lot of changes, developing multiple chips for each customer.

Host

The next question comes from Harsh Kumar of Piper Sandler.

Question: Harsh Kumar

Yes, Chen Fuyang and team. First of all, congratulations on achieving some pretty amazing numbers. I have a simple question and a more strategic question. The simple one is, Chen Fuyang and Kirsten, your guidance for the AI business requires nearly $1.7 billion in sequential growth. I’m curious if you could talk about how this growth is distributed among the existing three customers, is it fairly balanced, all growing, or is one customer driving most of the growth?

Then, Chen Fuyang, strategically, one of your competitors recently acquired a photonic fabric company. I’m curious about your thoughts on this technology; do you see it as disruptive, or do you think it’s just hype at this stage?

Answer: Chen Fuyang (CEO)

I like the way you framed your question, because you asked it almost hesitantly. Thank you. On the first part, yes, we are driving growth, and it is starting to feel as though it never ends. It is a real mix of existing customers and existing XPUs, and a large part of it is XPUs. That does not diminish the fact that, as I pointed out in my remarks, there is demand for switches, not only Tomahawk 6 but also Tomahawk 5. Demand for our latest 1.6 Tbps DSP, which enables optical interconnect for scale-out, is extremely strong. By extension, demand for optical components such as lasers and PINs is simply insane.

All of this adds up. Now, as you might guess, all of this is relatively small in dollar terms compared with XPUs. To give you a sense, let me look at it from the backlog perspective. Of the $73 billion AI revenue backlog I mentioned for the next 18 months, perhaps $20 billion is from everything else. The rest is from XPUs. I hope this gives you an idea of the mix. But everything else is still $20 billion, which is by no means a small number. So we value it.
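The backlog mix described above reduces to simple arithmetic. The sketch below takes the two figures stated in the answer ($73 billion total, "perhaps" $20 billion for everything other than XPUs) and derives the implied XPU portion and shares. The per-quarter average is an added assumption that spreads the backlog evenly over six quarters (18 months); the call gives no delivery schedule, and the $73 billion is described as a figure expected to grow.

```python
# Backlog mix implied by the figures quoted in the answer (in $ billions).
TOTAL_BACKLOG_B = 73.0   # AI backlog stated for the next 18 months
OTHER_B = 20.0           # "perhaps $20 billion" for everything except XPUs
QUARTERS = 18 // 3       # 18 months expressed as six quarters

# The remainder is attributed to XPUs.
xpu_b = TOTAL_BACKLOG_B - OTHER_B

# Each portion as a share of the total backlog.
xpu_share = xpu_b / TOTAL_BACKLOG_B
other_share = OTHER_B / TOTAL_BACKLOG_B

# Assumption: an even spread across quarters, purely for a sense of scale.
avg_per_quarter_b = TOTAL_BACKLOG_B / QUARTERS

print(f"XPU backlog: ${xpu_b:.0f}B ({xpu_share:.0%} of total)")
print(f"Everything else: ${OTHER_B:.0f}B ({other_share:.0%} of total)")
print(f"Even-spread average: ${avg_per_quarter_b:.1f}B per quarter")
```

On these inputs, XPUs account for roughly $53 billion, a bit under three quarters of the stated backlog.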

On your next question, about silicon photonics as a way to create better, more efficient, lower-power interconnects, not only for scale-out but hopefully also for scale-up: yes, I can see a point in the future when silicon photonics becomes the only viable solution, and then it will become important. We are not there yet, but we have the technology and continue to develop it.

Even when we developed it for 400-gigabit and then 800-gigabit bandwidth, the market was not ready. We are now working on 1.6-terabit bandwidth to create silicon photonic switches and silicon photonic interconnects, but even with the product in hand, we are not sure it will be fully deployed. Engineers, ours and our peers', will always find ways to keep doing scale-up with copper inside the rack for as long as possible, and then with pluggable optical modules. Only as a last resort, when you can no longer do it well with pluggable optical modules and certainly cannot do it with copper, will you turn to silicon photonics. It will happen, and we are ready; I just want to say it won't be soon.

Host

The next question comes from Karl Ackerman of BNP Paribas.

Question: Karl Ackerman

Yes, thank you. Chen Fuyang, could you talk about your supply chain resilience and visibility with key material suppliers, especially as you support not only existing customer projects but also the two new custom computing processors you announced this quarter? I mean, you also happen to serve a large part of the network and computing AI supply chain, and you mentioned record backlog orders. If you were to point out some bottlenecks, what areas are you working to address and mitigate supply chain bottlenecks? How do you think the situation will improve by 2026? Thank you.

Answer: Chen Fuyang (CEO)

Bottlenecks are usually all-encompassing. In some ways, we are very fortunate to have product technology and business lines to create multiple key cutting-edge components to support today's most advanced AI data centers. As I mentioned earlier, our DSP now reaches 1.6 Tbps. This is the leading connection bandwidth for top-tier XPUs and even GPUs. We intend to maintain this position. We also have lasers to match, EML, VCSEL, CW lasers.

So we are fortunate to have all of these along with the key active components that go with them. We recognized this early and scaled capacity to match demand. This is a long answer, but my point concerns the suppliers of racks for these data center systems. I am setting aside power enclosures, transformers, gas turbines, and all those things, which start to go beyond our scope.

If you look only at the racks for AI systems, we probably have a good grasp of where the bottlenecks are, because sometimes we ourselves are part of the bottleneck, and then we find ways to solve it. So we feel quite good about the situation through 2026.

Host

The next question comes from Christopher Rolland of Susquehanna.

Question: Christopher Rolland

Hi, thanks for the question. First, a clarification, and then my question. Sorry to come back to this issue again. But if I understand correctly, Chen Fuyang, I think you are saying that the agreement with OpenAI is a framework agreement. So it may not be binding, similar to the agreements with NVIDIA and AMD. Secondly, you mentioned that non-AI semiconductor revenue is flat. What is happening in that area? Is there still an inventory surplus? What is needed to get it growing again? Do you ultimately think that business will see growth? Thank you.

Answer: Chen Fuyang (CEO)

Well, regarding non-AI semiconductors, the broadband business is indeed recovering very well, but we are not seeing the same in our other businesses. What we see there is stability, not a sharp, sustainable recovery. So I guess it will take another one or two quarters, but we have not seen any further deterioration in demand. I think maybe AI is sucking the oxygen out of spending in other areas at enterprises and hyperscale data centers. We have not seen the situation worsen, nor have we seen it recover quickly, except for the broadband business. That’s a simple summary of the non-AI business.

Regarding OpenAI, I won’t go into details, I just want to tell you what that 10-gigawatt statement is about. Additionally, our collaboration with them on custom accelerators has progressed to a very advanced stage and will happen soon. There will be a commitment element to this. But what I previously articulated was that 10-gigawatt statement. That 10-gigawatt statement is an agreement aimed at reaching consensus on developing 10 gigawatts of capacity for OpenAI during the period from 2027 to 2029. This is different from the XPU project we are developing with them.

Host

The last question comes from Joe Moore at Morgan Stanley.

Question: Joseph Moore

Great, thank you very much. If you have $21 billion in rack revenue in the second half of 2026, I want to ask, will we maintain that run rate after that? Will you continue to sell racks? Or will this business mix change over time? I actually just want to clarify how much of your 18-month backlog is currently full systems.

Answer: Chen Fuyang (CEO)

That’s an interesting question. This question basically boils down to how much computing power our customers will need in the future, say over an 18-month period. Your guess might be as good as mine, based on the public information we know, which is what really matters. But if they need more, then you will see that continue, perhaps even at a larger scale. If they don’t need it, then maybe it won’t. But we want to indicate that this is the demand we are currently seeing for that period.

Host

Now I would like to turn the call back to Ji Yoo (Head of Investor Relations) for closing remarks.

Ji Yoo (Head of Investor Relations)

Thank you, host. This quarter, Broadcom will present at the New Street Research Virtual AI Big Ideas Conference on December 15, 2025 (Monday). Broadcom currently plans to report its Q1 FY2026 earnings after the market closes on March 4, 2026 (Wednesday). The public webcast of Broadcom's earnings call will take place at 2 PM Pacific Time. This concludes today's earnings call. Thank you all for participating. Host, you may end the call now.

Host

That concludes today's agenda. Thank you all for participating. You may now disconnect.