Google challenges OpenAI again: the more efficient Gemini 3 Flash launches as the default model in the Gemini app and powers Search from day one

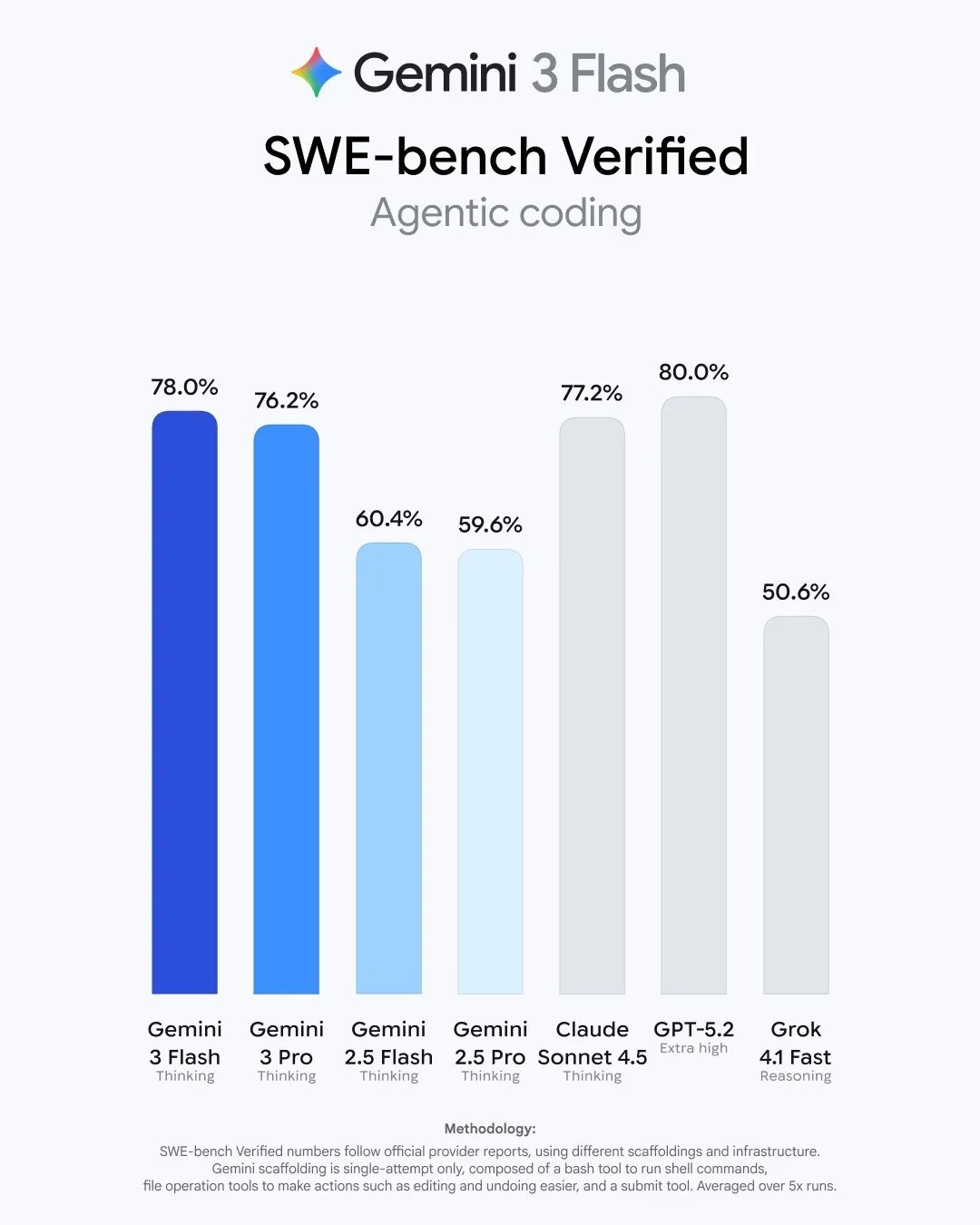

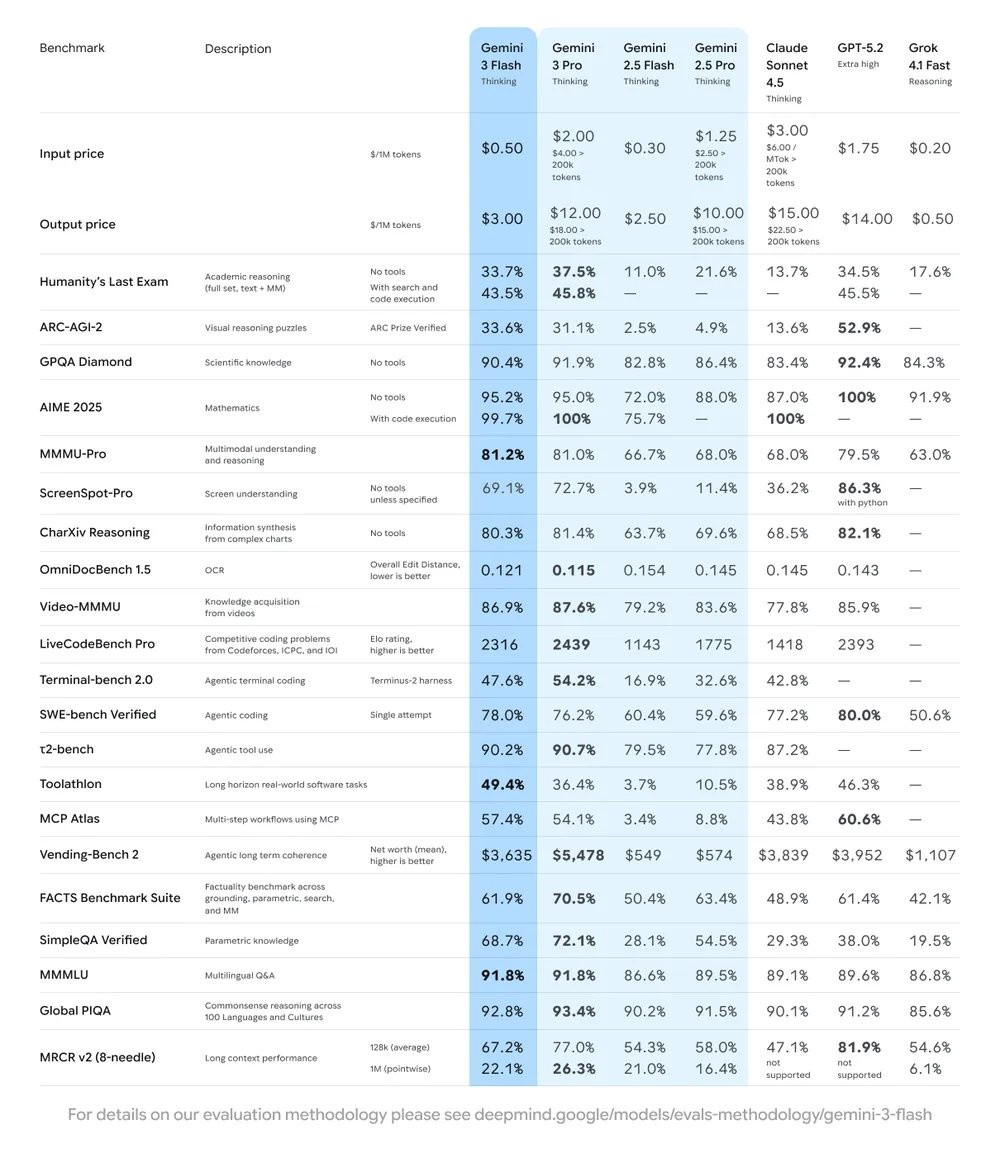

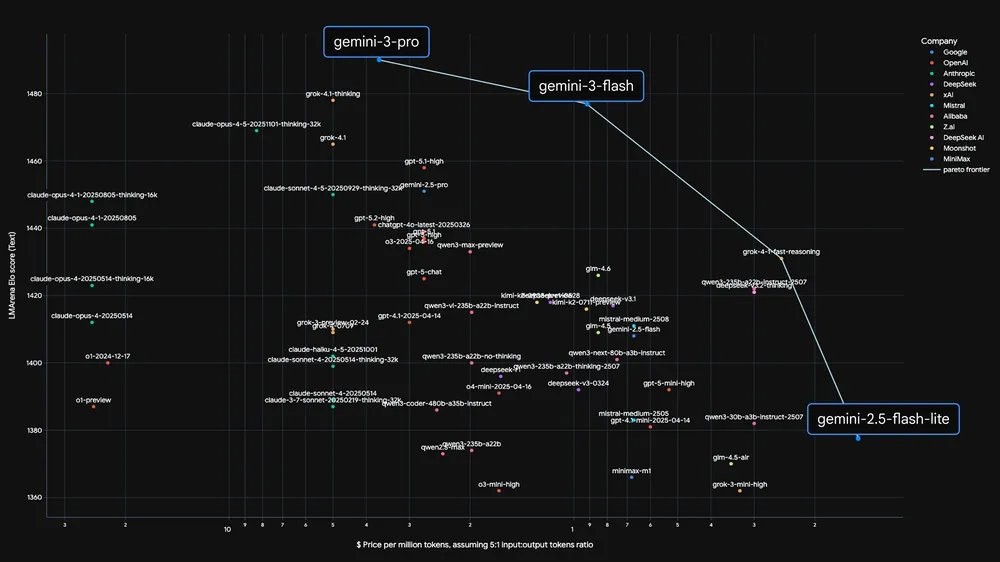

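On a benchmark that evaluates agentic coding ability, Gemini 3 Flash scores even higher than Gemini 3 Pro. The new Flash model retains reasoning ability close to that of Gemini 3 Pro while running three times as fast as Gemini 2.5 Pro, at a quarter of the cost of Gemini 3 Pro. It is priced at $0.50 per million input tokens and $3.00 per million output tokens, slightly above Gemini 2.5 Flash, while outperforming Gemini 2.5 Pro.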

Barely a month after releasing its most capable model, Gemini 3 Pro, Google has stepped up its challenge to OpenAI.

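On Wednesday the 17th (US Eastern Time), Google announced Flash, the newest member of the Gemini 3 family. On launch day, the fast, efficiency-focused model replaced Gemini 2.5 Flash as the default model in the Gemini app and also became the default model behind AI Mode in Google Search, a sign that Google's distribution advantage in the AI race is turning into a concrete market offensive.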

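Google is pushing its strongest AI capabilities to millions of users worldwide at lower cost and higher speed. Tulsee Doshi, senior director of product management for Gemini at Google DeepMind, said Google positions Flash as a workhorse model. It retains reasoning ability close to that of Gemini 3 Pro while running three times as fast as Gemini 2.5 Pro, at a quarter of the cost of Gemini 3 Pro.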

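Doshi said: "We launched Pro a few weeks ago and were excited by the market's response. With Gemini 3 Flash, we are bringing this model to everyone." Doshi also noted that on SWE-bench Verified, a benchmark for agentic coding ability, Gemini 3 Flash outperforms Gemini 3 Pro.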

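The release of Gemini 3 Flash comes as competition between Google and OpenAI grows increasingly heated. The Gemini 3 series, released on November 18, prompted OpenAI to declare a "code red" early this month. Reports last week said Gemini's recent growth rates in weekly mobile app downloads, monthly active users, and global website visits had all outpaced ChatGPT's, although ChatGPT still accounted for 90% of mobile sessions in late November. OpenAI responded last week with GPT-5.2, and on Tuesday of this week it released a new image generation model, GPT Image 1.5.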

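Industry observers say the AI race, which is increasingly becoming a two-way contest between Google and OpenAI, has major implications not only for AI technology itself but for the wider economy. The relentless release cycle reflects how brutal competition is at the frontier of the model race: any company can quickly slip from leader to also-ran. OpenAI has a first-mover advantage, but Gemini has broader distribution through Search and Google's core apps. Since the release of Gemini 3, Google's API has been processing more than 1 trillion tokens per day.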

Performance on par with flagship models, coding ability ahead of its sibling Pro

Across several benchmarks, Gemini 3 Flash performs close to, and in some cases better than, larger models.

On SWE-bench Verified, Gemini 3 Flash reaches a resolve rate of 78%, second only to GPT-5.2's 80%. That beats not only the Gemini 2.5 series but also its sibling Gemini 3 Pro, which scores 76.2%.

On Humanity's Last Exam, a test of cross-domain expert knowledge, Gemini 3 Flash scores 33.7% without tools. That is below Gemini 3 Pro's 37.5% and GPT-5.2's 34.5%, but far above Gemini 2.5 Flash's 11%.

On MMMU-Pro, a multimodal reasoning benchmark, Gemini 3 Flash scores 81.2%, ahead of all competitors, including Gemini 2.5 and Gemini 3 Pro.

On GPQA Diamond, a benchmark of PhD-level reasoning and knowledge, Gemini 3 Flash scores 90.4%. That is below GPT-5.2's 92.4% and Gemini 3 Pro's 91.9%, but every other competitor scores under 90%.

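Doshi told the media: "We position Flash as more of a workhorse model. Looking at input and output prices, Flash is a much cheaper product from a cost perspective, which actually allows many companies to run bulk tasks."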

Clear cost advantage, three times the speed of 2.5 Pro

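Gemini 3 Flash is priced at $0.50 per 1 million input tokens and $3.00 per 1 million output tokens. That is slightly higher than Gemini 2.5 Flash, at $0.30 per 1 million input tokens and $2.50 per 1 million output tokens, but Google says the new-generation Flash model outperforms Gemini 2.5 Pro while running three times as fast.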
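To make the price gap concrete, the following is a minimal sketch that turns the per-million-token prices quoted above into a per-request cost. The dictionary keys are plain labels chosen for this example, not official API model identifiers, and the workload (200,000 input tokens, 50,000 output tokens) is hypothetical.

```python
# Prices in USD per 1 million tokens, as quoted in the article.
# The keys are illustrative labels, not official API model IDs.
PRICES = {
    "gemini-3-flash": {"input": 0.50, "output": 3.00},
    "gemini-2.5-flash": {"input": 0.30, "output": 2.50},
}


def request_cost(model, input_tokens, output_tokens):
    """Return the USD cost of one request at the quoted list prices."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
new_cost = request_cost("gemini-3-flash", 200_000, 50_000)
old_cost = request_cost("gemini-2.5-flash", 200_000, 50_000)
print(f"Gemini 3 Flash:   ${new_cost:.4f}")
print(f"Gemini 2.5 Flash: ${old_cost:.4f}")
```

For this assumed workload the newer model costs about $0.25 against about $0.185, so the list-price premium is real if token counts stay the same.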

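More importantly, on tasks that require thinking, Gemini 3 Flash uses on average 30% fewer tokens than 2.5 Pro. So although the per-token price is slightly higher, total token consumption falls on some tasks, which can reduce the overall cost.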
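The trade-off between a higher unit price and fewer tokens can be sketched as simple arithmetic. The example below assumes cost scales linearly with tokens billed at a single price. It is an illustration only: the 30% figure in the article is measured against Gemini 2.5 Pro, whose price the article does not give, so the output prices quoted for the two Flash models are borrowed here purely to show the calculation.

```python
def relative_cost(old_price, new_price, token_reduction):
    """Cost of the new model as a fraction of the old one for the same task,
    assuming cost is tokens times a single per-token price."""
    return (new_price / old_price) * (1.0 - token_reduction)


def break_even_reduction(old_price, new_price):
    """Fraction of tokens the new model must save to cost the same as the old."""
    return 1.0 - old_price / new_price


# Illustration only: output prices per 1M tokens quoted for 2.5 Flash (2.50)
# and 3 Flash (3.00). Applying the 30% reduction to this pair is an assumption.
ratio = relative_cost(2.50, 3.00, 0.30)
needed = break_even_reduction(2.50, 3.00)
print(f"Relative cost with 30% fewer tokens: {ratio:.2f}")
print(f"Break-even token reduction: {needed:.1%}")
```

Under these assumptions, a 20% higher price breaks even once a task uses roughly 16.7% fewer tokens, and a 30% reduction would bring the cost to about 0.84 of the original.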

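In the Gemini API and on Vertex AI, Gemini 3 Flash also comes with standard context caching, which can cut costs by up to 90% in applications whose repeated token usage reaches a certain threshold.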
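A rough sketch of how caching could affect an input bill follows. It assumes the "up to 90%" saving applies as a discount on cached input tokens only, ignores any cache storage fee, and does not model the reuse threshold, none of which the article spells out. The function name and the 80% cached share are hypothetical.

```python
INPUT_PRICE_PER_M = 0.50  # USD per 1M input tokens for Gemini 3 Flash, per the article


def input_cost(total_input_tokens, cached_fraction, cache_discount=0.90):
    """Estimate input cost when a share of the prompt is served from cache.

    Assumption: cached tokens are billed at (1 - cache_discount) of the
    normal input price; storage fees and thresholds are not modeled.
    """
    cached = total_input_tokens * cached_fraction
    fresh = total_input_tokens - cached
    billed_tokens = fresh + cached * (1.0 - cache_discount)
    return billed_tokens * INPUT_PRICE_PER_M / 1_000_000


# Hypothetical workload: 1,000,000 input tokens, 80% of them reused from cache.
without_cache = input_cost(1_000_000, 0.0)
with_cache = input_cost(1_000_000, 0.8)
print(f"Without cache: ${without_cache:.2f}")
print(f"With cache:    ${with_cache:.2f}")
```

In this sketch the saving approaches 90% only as the cached share approaches 100%, which is consistent with the article's "up to" wording.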

Google stresses that Gemini 3 Flash can support AI agent workflows at less than a quarter of the cost of Gemini 3 Pro, while offering higher rate limits.

Full product-line coverage: Bridgewater, Salesforce, and other enterprise customers are early adopters

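Gemini 3 Flash is rolling out to users worldwide starting today, covering consumers, developers, and enterprises. In the Gemini app, the model is free for all users globally, and users can still switch to the Pro model in the model picker for math and coding problems. In Google Search's AI Mode, Gemini 3 Flash becomes the default model, and US users can also access the more powerful Gemini 3 Pro for deep-thinking tasks.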

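For developers, the model is available in preview through Google AI Studio, Gemini CLI, Vertex AI, and Antigravity, the new coding tool released last month, and can also be accessed through development tools such as Android Studio. Enterprise users can get it through Vertex AI and Gemini Enterprise.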

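According to Google, several well-known companies have already begun using Gemini 3 Flash to transform their businesses and have responded enthusiastically. These companies praise the model's inference speed, efficiency, and reasoning ability, and consider it on par with larger models.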

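According to Google, Denis Shiryaev, head of AI tools ecosystem at software developer JetBrains, said: "In our JetBrains AI Chat and Junie agentic coding evaluations, Gemini 3 Flash delivered quality close to Gemini 3 Pro, with significantly lower inference latency and cost."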

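Jasjeet Sekhon, head of AIA Labs and chief scientist at Bridgewater Associates, the world's largest hedge fund, said: "At Bridgewater, we need models that can handle large volumes of unstructured multimodal datasets without sacrificing conceptual understanding. Gemini 3 Flash is the first model to deliver Pro-level depth at the speed and scale our workflows require."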

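Companies including Salesforce, Workday, Figma, Cursor, Harvey, and Latitude have also adopted the model. Robby Stein, vice president of product for Google Search, said the new Flash model will help users with more nuanced searches involving multiple conditions, such as finding evening activities suitable for parents with young children.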