Behind SanDisk's Surge: Three Catalysts Converge, NAND Becomes a "Necessity," and AI Reprices Storage

Jensen Huang struck the first spark by systematically introducing the concept of ICMS and stating plainly that context, rather than computing power itself, is becoming AI's new bottleneck, which opens new application scenarios for NAND. DeepSeek's Engram has verified at the model level that NAND can serve as "slow memory." Meanwhile, Claude Code has amplified rigid demand for long-term storage at the application level.

In the past three weeks, the storage sector has experienced a rare "perfect storm."

SanDisk's stock price has risen more than 100%, and NAND-related stocks have surged across the board. On the surface this looks like a typical storage-cycle rebound; however, a closer look at the technology and demand shifts since the start of the year suggests it is better understood as a value reassessment triggered by the evolution of AI architecture.

From the new inference storage architecture NVIDIA unveiled at CES, to DeepSeek's release of the Engram model, to Claude Code accelerating the arrival of "stateful AI agents," three previously unrelated technology paths had converged on the same conclusion by early 2026:

Storage is transitioning from a "cost item" to a "core production factor" of AI.

Jensen Huang Ignites the First Spark: Context Becomes the Bottleneck, Storage Must Be Re-architected

The runaway growth of AI inference is forcing computing systems to be re-architected.

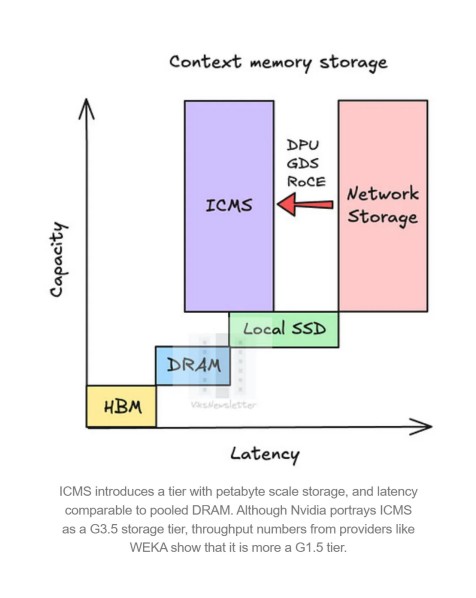

At CES 2026, NVIDIA CEO Jensen Huang systematically introduced the concept of ICMS (Inference Context Memory Storage) for the first time and made a clear call: context, rather than computing power itself, is becoming AI's new bottleneck.

As context windows grow from hundreds of thousands of tokens and the context data behind them swells toward terabyte scale, letting the KV cache and context memory compete for HBM is becoming unsustainable. HBM3e costs far more per gigabyte than NAND, and limited CoWoS packaging capacity puts a hard cap on HBM supply.
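A rough KV cache estimate shows why long contexts outgrow HBM. The sketch below uses the standard per-token formula (one key and one value vector per KV head, per layer); the model dimensions are assumptions loosely modeled on a 70B-class dense model with grouped-query attention, not figures from NVIDIA.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Bytes needed to cache keys and values for a batch of sequences.

    The leading factor of 2 covers storing both a key and a value vector
    per KV head, per layer, per token.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return per_token * seq_len * batch


# Assumed config: 80 layers, 8 KV heads (GQA), head_dim 128, FP16 (2 bytes).
per_token = kv_cache_bytes(80, 8, 128, seq_len=1)
one_million = kv_cache_bytes(80, 8, 128, seq_len=1_000_000)

print(f"{per_token / 1024:.0f} KiB per token")                # 320 KiB per token
print(f"{one_million / 1e9:.1f} GB for a 1M-token context")   # 327.7 GB for a 1M-token context
```

Under these assumptions a single million-token context needs about a third of a terabyte of cache, so many concurrent long-context sessions reach terabyte scale quickly.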

NVIDIA's solution is not to "stack more GPUs," but to offload context from HBM.

In the newly released DGX Vera Rubin NVL72 SuperPOD architecture, NVIDIA has added dedicated storage racks for inference context alongside the compute and networking racks. These racks connect to the compute system through BlueField DPUs and Spectrum-X Ethernet, effectively serving as "working memory."
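The idea of offloading context from HBM can be illustrated with a generic two-tier cache. This is a minimal sketch, not NVIDIA's ICMS software: the class name, the least-recently-used (LRU) policy, and the in-process dictionaries standing in for HBM and for the NAND storage racks are all assumptions for illustration.

```python
from collections import OrderedDict


class TieredContextStore:
    """Hypothetical two-tier store: a small fast tier (standing in for HBM)
    backed by a large slow tier (standing in for NAND context racks).

    LRU entries are demoted to the slow tier when the fast tier is full,
    and promoted back to the fast tier when they are accessed again.
    """

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast: OrderedDict[str, bytes] = OrderedDict()
        self.slow: dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        self.slow.pop(key, None)      # drop any stale copy in the slow tier
        self.fast[key] = value
        self.fast.move_to_end(key)    # mark as most recently used
        self._evict_if_needed()

    def get(self, key: str) -> bytes:
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        value = self.slow.pop(key)    # raises KeyError if absent in both tiers
        self.fast[key] = value        # promote back to the fast tier
        self._evict_if_needed()
        return value

    def _evict_if_needed(self) -> None:
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # LRU entry
            self.slow[old_key] = old_value                      # demote it


store = TieredContextStore(fast_capacity=2)
for sid in ("s1", "s2", "s3"):
    store.put(sid, f"context for {sid}".encode())
# "s1" was least recently used, so it now lives in the slow tier.
```

The design choice mirrors the text: the fast tier only holds what is actively in use, while everything else stays addressable in the cheaper, larger tier.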

In demand terms, this change is far from marginal:

- Each SuperPOD adds roughly 9.6 PB of NAND

- Per NVL72 compute rack, that works out to about 1.2 PB of incremental NAND

- If 100,000 NVL72 racks ship in SuperPOD configurations by 2027, that implies roughly 120 EB of additional NAND demand

Against global annual NAND demand of roughly 1.1–1.2 ZB, that amounts to about 10% in new structural demand. More importantly, this demand comes directly from AI infrastructure rather than traditional consumer electronics.
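The demand estimate above can be checked with a few lines of arithmetic. The inputs are the article's own figures; the one derived point is that 9.6 PB per SuperPOD divided by 1.2 PB per rack implies eight NVL72 racks per SuperPOD.

```python
PB_PER_SUPERPOD = 9.6          # incremental NAND per SuperPOD (article estimate)
PB_PER_RACK = 1.2              # incremental NAND per NVL72 rack (article estimate)
RACKS_SHIPPED = 100_000        # NVL72 racks shipped in SuperPOD form by 2027 (scenario)
GLOBAL_DEMAND_ZB = (1.1, 1.2)  # annual global NAND demand range

racks_per_superpod = PB_PER_SUPERPOD / PB_PER_RACK
added_eb = RACKS_SHIPPED * PB_PER_RACK / 1_000                 # 1 EB = 1,000 PB
shares = [added_eb / (zb * 1_000) for zb in GLOBAL_DEMAND_ZB]  # 1 ZB = 1,000 EB

print(f"{racks_per_superpod:.0f} racks per SuperPOD")   # 8 racks per SuperPOD
print(f"{added_eb:.0f} EB of added NAND demand")        # 120 EB of added NAND demand
print(f"{min(shares):.1%} to {max(shares):.1%} of global demand")  # 10.0% to 10.9% of global demand
```

Depending on which end of the demand range is used, the share lands between 10% and 11%.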

DeepSeek Engram: NAND Used as "Slow Memory" for the First Time

If NVIDIA solved the problem at the level of engineering architecture, DeepSeek's Engram model makes the case for NAND at the algorithmic level.

Engram's core breakthrough is deterministic lookup. Unlike MoE, whose routing is decided dynamically during computation, or dense Transformers, whose computation depends on intermediate hidden states, Engram can determine exactly which memory entries it needs from the input tokens alone, before computation begins, so they can be prefetched in advance.

In conventional models, access patterns are not known ahead of time, so only ultra-low-latency memory such as HBM can serve them. Engram's deterministic prefetching, by contrast, effectively hides the latency gap between SSDs and HBM.
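Why deterministic lookup enables prefetching can be shown with a toy sketch: because row indices are computed from the input tokens alone, the slow-tier read can be issued before the model runs and overlapped with computation. The bigram hashing, the table size, the dictionary standing in for an SSD-resident table, and the thread-based prefetch are all assumptions for illustration, not DeepSeek's implementation.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

TABLE_SIZE = 1_000  # hypothetical number of rows in the offloaded table


def ngram_indices(token_ids: list[int], n: int = 2) -> list[int]:
    """Hash each n-gram of input tokens to a table row (hypothetical scheme).

    Indices depend only on the input tokens, so they are known before any
    model computation runs; that is what makes prefetching possible.
    """
    indices = []
    for i in range(len(token_ids) - n + 1):
        key = ",".join(map(str, token_ids[i:i + n])).encode()
        digest = hashlib.blake2b(key, digest_size=8).digest()
        indices.append(int.from_bytes(digest, "little") % TABLE_SIZE)
    return indices


def slow_tier_read(table: dict[int, list[float]], rows: list[int]) -> dict[int, list[float]]:
    """Stand-in for a high-latency read from SSD or host memory."""
    return {r: table[r] for r in rows}


def forward_with_prefetch(token_ids: list[int],
                          table: dict[int, list[float]],
                          early_layers: Callable[[list[int]], float]):
    rows = ngram_indices(token_ids)                        # known up front
    with ThreadPoolExecutor(max_workers=1) as pool:
        future = pool.submit(slow_tier_read, table, rows)  # fetch starts now
        hidden = early_layers(token_ids)                   # compute overlaps the fetch
        fetched = future.result()                          # waits only if still pending
    memory = [fetched[r] for r in rows]                    # one row per n-gram
    return hidden, memory


table = {i: [float(i)] for i in range(TABLE_SIZE)}
hidden, memory = forward_with_prefetch([5, 17, 42, 17], table, early_layers=sum)
```

In a model with data-dependent routing, the row indices would only be known mid-computation, leaving nothing to overlap the slow read with.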

DeepSeek's paper reports that:

- An embedding table with 100 billion parameters can be completely offloaded to host memory

- Performance loss is less than 3%

- As models scale up, 20–25% of parameters are naturally suited to serve as "offloadable static memory"
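To put these figures in storage terms, the sketch below converts parameter counts into bytes. The numeric precisions and the 1-trillion-parameter model size are assumptions for illustration; the storage format used in the paper is not stated here.

```python
def table_footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Footprint of a parameter table in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def offloadable_params(total_params: float, low: float = 0.20,
                       high: float = 0.25) -> tuple[float, float]:
    """Range of parameters that could sit in slow memory, per the 20-25% figure."""
    return total_params * low, total_params * high


# A 100B-parameter embedding table at a few assumed precisions
for name, nbytes in [("FP32", 4), ("BF16", 2), ("FP8", 1)]:
    print(f"{name}: {table_footprint_gb(100e9, nbytes):,.0f} GB")
# FP32: 400 GB / BF16: 200 GB / FP8: 100 GB

# Offloadable share of a hypothetical 1-trillion-parameter model
lo, hi = offloadable_params(1e12)
print(f"{lo / 1e9:.0f}B to {hi / 1e9:.0f}B parameters")  # 200B to 250B parameters
```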

What does this mean?

It means NAND is no longer just "cold data storage." For the first time, it is being systematically integrated into a tiered memory hierarchy as AI's "slow RAM," holding a vast knowledge base that is accessed infrequently but remains indispensable.

On cost, NAND remains far cheaper per gigabyte than DDR and HBM; once it becomes irreplaceable within model architectures, its strategic value in the data center will be repriced.

Morgan Stanley analyst Shawn Kim and his team believe DeepSeek demonstrates a technology path of "Doing More With Less." In their view, this hybrid architecture not only eases practical constraints on high-end AI computing resources but also shows the global market that efficient storage-compute synergy may be more cost-effective than simply adding more computing power.

Claude Code: AI Shifts from "Stateless" to "Stateful," and Storage Needs Grow Exponentially

The third catalyst comes from the application layer.

The arrival of Claude Code marks AI's evolution from a "chat tool" into a long-running agent. Unlike one-shot text generation, a coding AI needs to:

- Read and modify files repeatedly

- Debug and backtrack multiple times

- Maintain session state across several days

In essence, this kind of AI is a "stateful system" with long-term working memory.

And this working memory clearly cannot reside long-term in expensive GPU HBM.

BlueField DPUs paired with NAND offer a cost-controlled solution: an agent's session state and historical context can live in the NAND tier instead of tying up compute resources.
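Keeping agent state out of GPU memory can be sketched as a simple checkpoint-and-restore cycle. This is a minimal sketch under stated assumptions: the hypothetical `AgentSession` fields, the JSON file format, and a local directory standing in for a NAND tier reached through BlueField DPUs are all illustrative; a real system would persist far richer state, such as KV caches.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class AgentSession:
    """Hypothetical long-running coding-agent session state."""
    session_id: str
    open_files: list[str] = field(default_factory=list)
    history: list[str] = field(default_factory=list)


def checkpoint(session: AgentSession, tier_dir: Path) -> Path:
    """Persist session state to the storage tier and return the file path."""
    path = tier_dir / f"{session.session_id}.json"
    path.write_text(json.dumps(asdict(session)))
    return path


def restore(session_id: str, tier_dir: Path) -> AgentSession:
    """Reload a session from the storage tier so the agent can resume."""
    data = json.loads((tier_dir / f"{session_id}.json").read_text())
    return AgentSession(**data)


with tempfile.TemporaryDirectory() as tmp:
    tier = Path(tmp)  # stands in for the NAND tier
    session = AgentSession("demo", ["main.py"], ["ran tests", "patched bug"])
    checkpoint(session, tier)
    print(restore("demo", tier) == session)  # True
```

Between working sessions, nothing about the agent needs to occupy GPU memory; only the restore step pulls state back when work resumes.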

As AI agent adoption rises, storage demand will decouple from the number of inference calls and instead track "state duration," meaning how long each agent's state must be kept. That is an entirely new growth logic.
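The "state duration" logic maps onto Little's law from queueing theory: the average number of sessions held in storage equals the rate at which sessions start multiplied by how long each one is retained. The toy model below uses a hypothetical session rate and per-session state size, chosen only to show that retention time, not call volume, drives the stored total.

```python
def resident_state_tb(sessions_per_day: float, state_gb_per_session: float,
                      retention_days: float) -> float:
    """Average stored agent state in TB, via Little's law (L = lambda * W).

    sessions_per_day is the arrival rate (lambda); retention_days is how long
    each session's state is kept (W). Inference calls per session do not
    appear anywhere: storage depends on duration, not call volume.
    """
    resident_sessions = sessions_per_day * retention_days
    return resident_sessions * state_gb_per_session / 1_000  # 1 TB = 1,000 GB


# Hypothetical workload: 1M new sessions per day, 2 GB of state each
print(resident_state_tb(1_000_000, 2, retention_days=1))  # 2000.0
print(resident_state_tb(1_000_000, 2, retention_days=7))  # 14000.0
```

Keeping the same sessions for a week instead of a day multiplies stored state sevenfold, even if the number of inference calls does not change.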

Why SanDisk? Why Now?

Three technology paths landed at the same time in early 2026, together making a highly persuasive case:

- NVIDIA has created new application scenarios for NAND at the hardware architecture level.

- DeepSeek has validated the feasibility of NAND as "slow memory" at the model level.

- Claude Code has amplified the rigid demand for long-term storage at the application level.

This is not a short-term boost from a single customer or product, but a signal that AI architecture itself is changing.

Against this backdrop, SanDisk's stock performance no longer reflects just a "storage cycle rebound"; the market is starting to rethink a basic question: what is the true infrastructure of the AI era?

When NAND is driven at the same time by cyclical recovery, long-term demand, and structural re-evaluation, its pricing logic naturally takes a step change. That may be the real reason behind SanDisk's surge.